This article shares the full code of a Python crawler that scrapes Taobao product listings, for your reference. The details are as follows.

1. Goal:

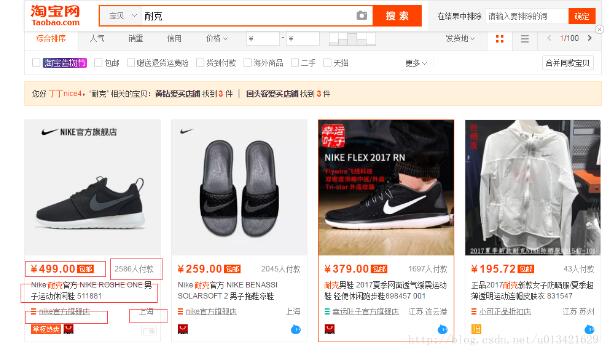

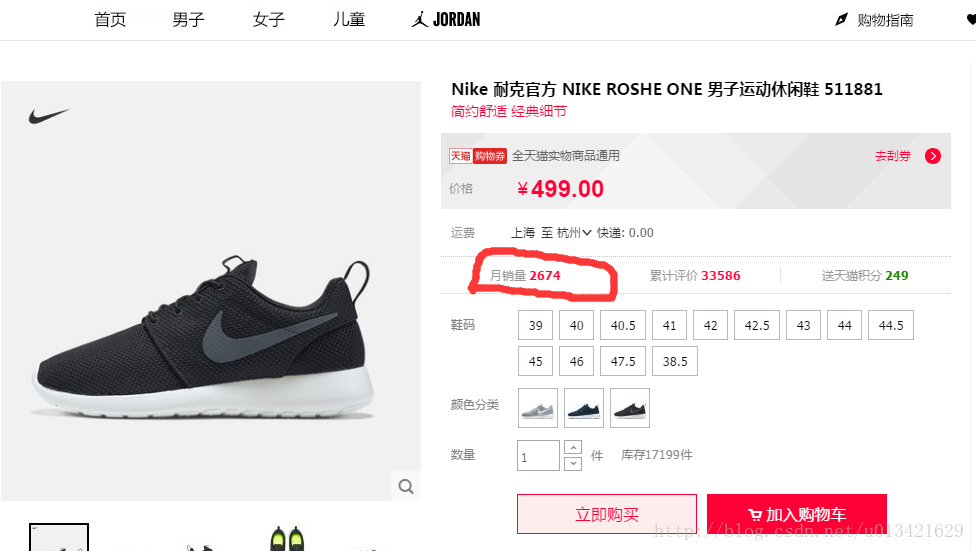

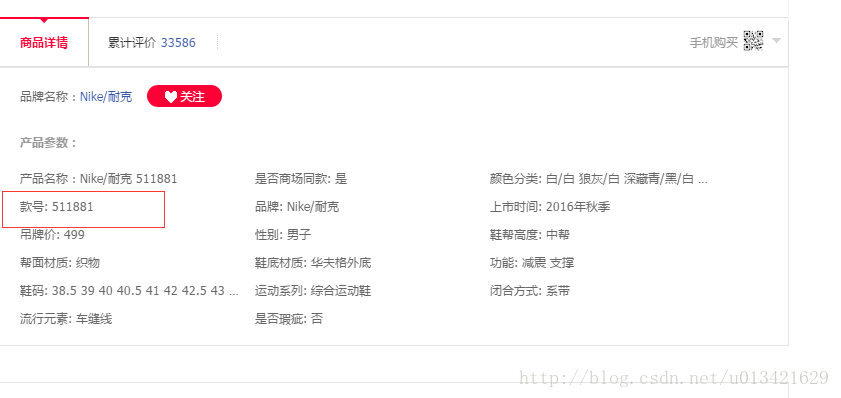

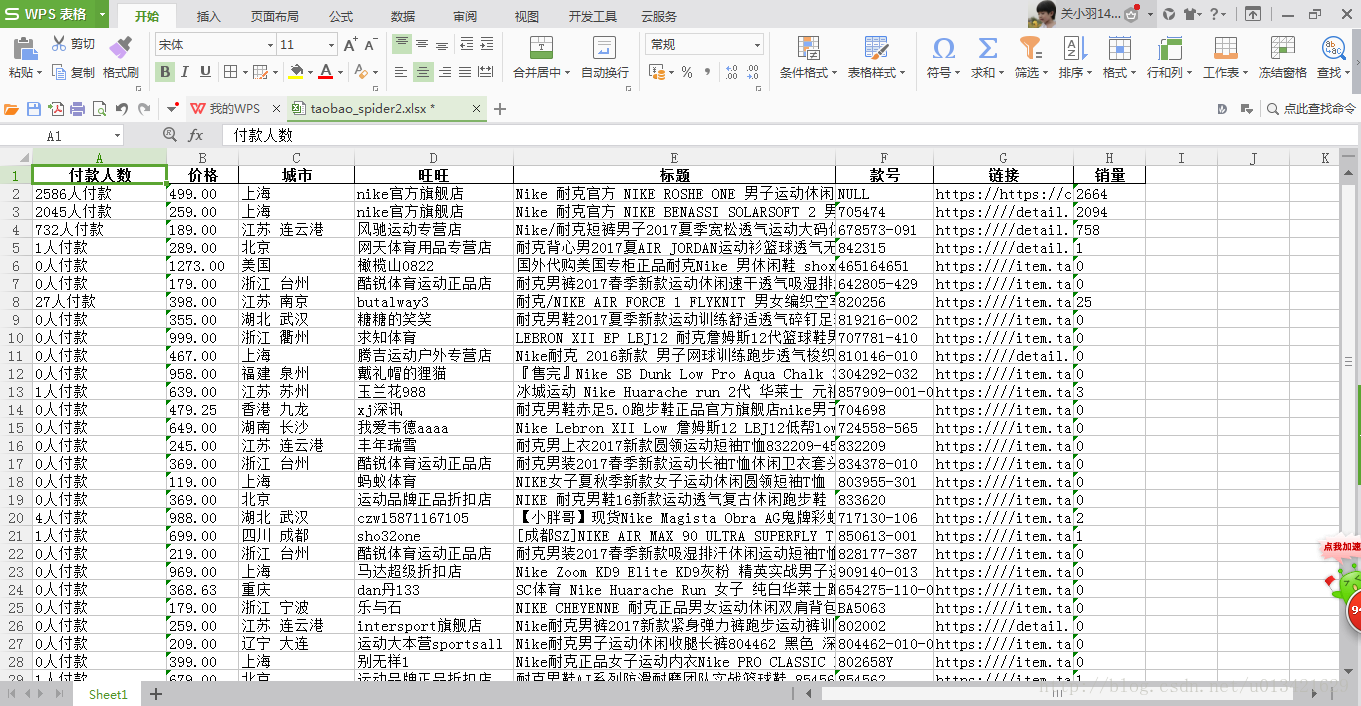

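Open the Taobao search page, search for the keyword 耐克 (Nike), and scrape each product's title, link, price, city, seller Wangwang ID, and number of buyers. Then follow each link to the second level (the product detail page) and scrape the product's sales volume, model number, and so on.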
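The search step can be sketched as a small URL builder. This is a minimal sketch in Python 3, not the author's code: the helper name `build_search_url` and the constants are hypothetical, only the `q`, `ie` and `s` query parameters from the article's script are kept, and the page step of 44 is taken from the `str(i*44)` offset that script uses.

```python
from urllib.parse import quote

SEARCH_URL = "https://s.taobao.com/search"
PAGE_SIZE = 44  # offset step taken from the article's script (s = page * 44)


def build_search_url(keyword, page):
    """Return the search URL for a keyword and a zero-based page index.

    Hypothetical helper: the keyword is percent-encoded as UTF-8 and the
    page index is converted to the `s` result offset.
    """
    return "{}?q={}&ie=utf8&s={}".format(SEARCH_URL, quote(keyword), page * PAGE_SIZE)


if __name__ == "__main__":
    # Print the URLs for the first two result pages of the keyword 耐克.
    for page in range(2):
        print(build_search_url("耐克", page))
```

Encoding the keyword at call time avoids hard-coding the percent-encoded string, so the same helper works for other search terms.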

2. Results

3. Source code


```python
# encoding: utf-8
# Written for Python 2.7 (print statements, reload(sys)).
import sys
reload(sys)
sys.setdefaultencoding('utf-8')

import re
import time

import pandas as pd
from lxml import etree
from selenium import webdriver

time1 = time.time()

# Automated browser simulation with headless PhantomJS.
# webdriver.PhantomJS exists only in older Selenium releases (removed in Selenium 4).
driver = webdriver.PhantomJS(executable_path='d:/python27/scripts/phantomjs.exe')

# Lists that store the scraped fields
title = []
price = []
city = []
shop_name = []
num = []
link = []
sale = []
number = []

# Search keyword 耐克 (Nike), percent-encoded as UTF-8
keyword = "%e8%80%90%e5%85%8b"

for i in range(0, 1):
    try:
        print "...............Scraping page " + str(i) + "..........................."
        url = ("https://s.taobao.com/search?q=" + keyword +
               "&imgfile=&js=1&stats_click=search_radio_all%3a1"
               "&initiative_id=staobaoz_20170710&ie=utf8&bcoffset=4&ntoffset=4"
               "&p4ppushleft=1%2c48&s=" + str(i * 44))
        driver.get(url)
        time.sleep(5)  # wait for the page to render
        html = driver.page_source
        selector = etree.HTML(html)

        # First level: fields on the search results page
        title1 = selector.xpath('//div[@class="row row-2 title"]/a')
        for each in title1:
            print each.xpath('string(.)').strip()
            title.append(each.xpath('string(.)').strip())

        price1 = selector.xpath('//div[@class="price g_price g_price-highlight"]/strong/text()')
        for each in price1:
            print each
            price.append(each)

        city1 = selector.xpath('//div[@class="location"]/text()')
        for each in city1:
            print each
            city.append(each)

        num1 = selector.xpath('//div[@class="deal-cnt"]/text()')
        for each in num1:
            print each
            num.append(each)

        shop_name1 = selector.xpath('//div[@class="shop"]/a/span[2]/text()')
        for each in shop_name1:
            print each
            shop_name.append(each)

        # Second level: open each product page
        link1 = selector.xpath('//div[@class="row row-2 title"]/a/@href')
        for each in link1:
            # Add the scheme only when the href does not already carry it
            kk = each if "https" in each else "https://" + each
            link.append(kk)
            print kk
            driver.get(kk)
            time.sleep(3)
            html2 = driver.page_source
            selector2 = etree.HTML(html2)

            # Sales volume (first XPath for Tmall pages, second for Taobao pages)
            sale1 = selector2.xpath('//*[@id="J_DetailMeta"]/div[1]/div[1]/div/ul/li[1]/div/span[2]/text()')
            for each in sale1:
                print each
                sale.append(each)
            sale2 = selector2.xpath('//strong[@id="J_SellCounter"]/text()')
            for each in sale2:
                print each
                sale.append(each)

            # Model number, taken from the attribute list
            if "tmall" in kk:
                number1 = re.findall('<ul id="J_AttrUL">(.*?)</ul>', html2, re.S)
                for each in number1:
                    m = re.findall('>*号: (.*?)</li>', str(each).strip(), re.S)
                    if len(m) > 0:
                        for each1 in m:
                            print each1
                            number.append(each1)
                    else:
                        number.append("null")
            if "taobao" in kk:
                number2 = re.findall('<ul class="attributes-list">(.*?)</ul>', html2, re.S)
                for each in number2:
                    h = re.findall('>*号: (.*?)</li>', str(each).strip(), re.S)
                    if len(h) > 0:  # the original tested len(m) here
                        for each2 in h:
                            print each2
                            number.append(each2)
                    else:
                        number.append("null")
            if "click" in kk:
                number.append("null")
    except Exception as e:
        # The original silently ignored errors; report them instead
        print "page " + str(i) + " failed: " + str(e)

print len(title), len(city), len(price), len(num), len(shop_name), len(link), len(sale), len(number)

# Data frame. Columns: title, price, Wangwang ID, city, number of buyers,
# link, sales volume, model number
data1 = pd.DataFrame({"标题": title, "价格": price, "旺旺": shop_name, "城市": city,
                      "付款人数": num, "链接": link, "销量": sale, "款号": number})
print data1

# Write to Excel
writer = pd.ExcelWriter(r'c:\\taobao_spider2.xlsx', engine='xlsxwriter',
                        options={'strings_to_urls': False})
data1.to_excel(writer, index=False)
writer.close()

time2 = time.time()
print u'ok, crawl finished!'
print u'Total time: ' + str(time2 - time1) + 's'

# Close the browser
driver.close()
```
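The script prints the length of every list before building the data frame, and `pd.DataFrame` raises a `ValueError` when the lists in the dict differ in length. The following is a minimal sketch in Python 3, not part of the original script, of one way to make the lengths equal first. The helper name `pad_columns` and the sample data are hypothetical; the `"null"` filler matches the placeholder the script already uses.

```python
from itertools import zip_longest


def pad_columns(columns, fill="null"):
    """Pad every list in `columns` to the length of the longest list.

    Hypothetical helper: `columns` maps a column name to a list of values.
    """
    names = list(columns)
    # zip_longest walks all lists in parallel and fills the short ones.
    rows = zip_longest(*(columns[name] for name in names), fillvalue=fill)
    padded = {name: [] for name in names}
    for row in rows:
        for name, value in zip(names, row):
            padded[name].append(value)
    return padded


if __name__ == "__main__":
    # Made-up sample values, only to show the padding.
    sample = {"title": ["shoe A", "shoe B"], "price": ["399.00"], "number": []}
    print(pad_columns(sample))
```

Padding only equalizes the lengths; it cannot tell which product a missing value belonged to, so the rows may still be misaligned. Collecting one record per product while scraping would be the more robust design.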

That is all for this article. I hope it helps with your learning, and I hope you will continue to support 服务器之家.

Original article: http://blog.csdn.net/u013421629/article/details/74960278