I. Preface

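Scraping Google Trends data requires a connection that can reach Google. From mainland China, that means going through a proxy or VPN.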

II. Approach

Scraping Google Trends is simple. The code is just a bit long, and it comes down to the following steps.

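The three pages we scrape (interest over time, related topics and related queries) all return JSON. The main job is to get each page's `token` and `req` values. You then build the request URL from them and fetch the data.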
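The sketch below covers the two generic pieces of that process, using only the standard library. The first strips the `)]}'` prefix that Google puts in front of these JSON responses. The second builds a widget-data URL from a `req`/`token` pair.

Assumptions:

- The helper names `strip_xssi_prefix` and `build_widget_url` are hypothetical.
- The `hl=zh-CN` and `tz=-480` values are simply the ones this article's script uses.
- `req` is sent back as JSON text via `json.dumps`, not via Python's `str()`.

```python
import json
from urllib.parse import urlencode


def strip_xssi_prefix(text: str) -> str:
    """Drop the anti-JSON-hijacking prefix (such as ")]}'," plus a newline).

    Assumes the payload is a JSON object, so everything before the first "{" is prefix.
    """
    start = text.find("{")
    if start == -1:
        raise ValueError("no JSON object found in response")
    return text[start:]


def build_widget_url(endpoint: str, req: dict, token: str,
                     hl: str = "zh-CN", tz: int = -480) -> str:
    """Build a widgetdata URL (e.g. endpoint "multiline" or "relatedsearches")."""
    base = "https://trends.google.com/trends/api/widgetdata/" + endpoint
    query = urlencode({
        "hl": hl,
        "tz": tz,
        # req came out of a JSON response, so serialize it back to compact JSON
        "req": json.dumps(req, separators=(",", ":")),
        "token": token,
    })
    return base + "?" + query


if __name__ == "__main__":
    print(json.loads(strip_xssi_prefix(")]}',\n{\"default\": {}}")))
    print(build_widget_url("multiline", {"time": "today 12-m"}, "abc"))
```

Taking everything from the first `{` avoids hard-coding the prefix length. The article's script instead slices off a fixed 5 or 6 characters.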

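The `token` and `req` values are all in the response to the explore request (`https://trends.google.com/trends/api/explore`, shown in the screenshot below). Parse that response to get them.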

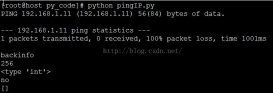
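The script below picks widgets by position (`widgets[0]`, `[2]`, `[3]`). An alternative is to look them up by their `id` field, which does not break if the order changes.

This sketch assumes the ids are `TIMESERIES`, `RELATED_TOPICS` and `RELATED_QUERIES`, which is what the explore response appeared to use. Check them against your own response in the browser's developer tools. `extract_token_req` is a hypothetical helper name.

```python
import json

# Assumed widget ids in the explore response; verify them in your own response
WIDGET_IDS = {
    "time": "TIMESERIES",
    "topics": "RELATED_TOPICS",
    "queries": "RELATED_QUERIES",
}


def extract_token_req(explore_text: str) -> dict:
    """Return {"time": {"req": ..., "token": ...}, "topics": ..., "queries": ...}."""
    # Skip the ")]}'" prefix: everything before the first "{"
    data = json.loads(explore_text[explore_text.find("{"):])
    found = {}
    for widget in data.get("widgets", []):
        widget_id = widget.get("id", "")
        for name, prefix in WIDGET_IDS.items():
            # startswith also accepts suffixed ids such as "RELATED_TOPICS_0"
            if name not in found and widget_id.startswith(prefix):
                found[name] = {"req": widget["request"], "token": widget["token"]}
    missing = set(WIDGET_IDS) - set(found)
    if missing:
        raise KeyError(f"widgets not found: {sorted(missing)}")
    return found
```

Raising a `KeyError` that names the missing widgets gives a clearer failure than an `IndexError` from a hard-coded position.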

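I don't really understand SOCKS5 proxies, so I copied a solution from the web. Once a global proxy is added for the current program, the script runs. The full code is below: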
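You may prefer not to patch `socket.socket` for the whole process. In that case, `requests` can route individual requests through a SOCKS5 proxy once its optional SOCKS support is installed (`pip install requests[socks]`).

This sketch only builds the `proxies` mapping that `requests` expects:

- `127.0.0.1:1080` is the same local proxy address the script uses.
- `socks5_proxies` is a hypothetical helper name.
- With the `socks5h://` scheme, host names are also resolved through the proxy. With `socks5://`, they are resolved locally.

```python
def socks5_proxies(host: str = "127.0.0.1", port: int = 1080,
                   remote_dns: bool = True) -> dict:
    """Build a `proxies` mapping for requests that sends HTTP and HTTPS via SOCKS5."""
    # socks5h:// resolves host names on the proxy; socks5:// resolves them locally
    scheme = "socks5h" if remote_dns else "socks5"
    proxy_url = f"{scheme}://{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


if __name__ == "__main__":
    # Usage (requires requests[socks]):
    # requests.get(url, headers=headers, proxies=socks5_proxies(), timeout=30)
    print(socks5_proxies())
```

Remote DNS (`socks5h`) is the default here. When Google's domains are blocked or poisoned locally, resolving them on the proxy side is usually what you want.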


```python
import socket
import json
import logging

import socks  # PySocks (pip install PySocks)
import requests
import pandas as pd

# Adding a SOCKS5 proxy gives the current program a global proxy:
# every socket created after this goes through the local proxy at 127.0.0.1:1080
socks.set_default_proxy(socks.SOCKS5, "127.0.0.1", 1080)
socket.socket = socks.socksocket

# Without the next line an InsecureRequestWarning is printed for every request
# (because of verify=False). It doesn't break anything, but it clutters the output.
# Capture warnings: route them to logging instead of printing them
logging.captureWarnings(True)
# Alternatively, add the following lines to silence only the requests certificate warning
# from requests.packages.urllib3.exceptions import InsecureRequestWarning
# requests.packages.urllib3.disable_warnings(InsecureRequestWarning)

# The data from the three pages is collected in these DataFrames
time_trends = pd.DataFrame()
related_topic = pd.DataFrame()
related_search = pd.DataFrame()

# Fill in the request headers from your own browser session
# (copy them from the developer tools; replace the two placeholders)
headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.114 Safari/537.36',
    'x-client-data': '<your x-client-data value>',
    'referer': 'https://trends.google.com/trends/explore',
    'cookie': '<your cookie string>',
}

# hl: interface language; tz: timezone offset in minutes (-480 = UTC+8)
BASE_PARAMS = {'hl': 'zh-CN', 'tz': '-480'}


def get_token_req(keyword):
    """Get the req and token values for the three pages we need."""
    comparison = {
        'comparisonItem': [{'keyword': keyword, 'geo': 'US', 'time': 'today 12-m'}],
        'category': 0,
        'property': '',
    }
    params = dict(BASE_PARAMS, req=json.dumps(comparison))
    r = requests.get('https://trends.google.com/trends/api/explore',
                     params=params, headers=headers, verify=False)
    # The body starts with ")]}'" and a newline; skip those 5 characters
    data = json.loads(r.text[5:])
    widgets = data['widgets']
    # widgets[0]: interest over time, [2]: related topics, [3]: related queries
    return {
        'req_1': widgets[0]['request'], 'token_1': widgets[0]['token'],
        'req_2': widgets[2]['request'], 'token_2': widgets[2]['token'],
        'req_3': widgets[3]['request'], 'token_3': widgets[3]['token'],
    }


def fetch_widget(endpoint, req, token):
    """Request one page's data; return the parsed JSON, or None on a non-200 status."""
    url = 'https://trends.google.com/trends/api/widgetdata/' + endpoint
    # req is a dict parsed from JSON, so serialize it back to JSON (not str())
    params = dict(BASE_PARAMS, req=json.dumps(req), token=token)
    r = requests.get(url, params=params, headers=headers, verify=False)
    if r.status_code != 200:
        print(r.status_code)
        return None
    # r.text decodes the body with the response charset (UTF-8);
    # the body starts with ")]}'," and a newline, so skip those 6 characters
    return json.loads(r.text[6:])


def parse_ranked(data, keyword):
    """Turn a relatedsearches response into a DataFrame (rankedList[1] is the "rising" list)."""
    df = pd.json_normalize(data['default']['rankedList'][1]['rankedKeyword'])
    # Links in the response are relative to the site root
    df['link'] = 'https://trends.google.com' + df['link']
    df['keyword'] = keyword
    return df


def get_info(keyword):
    """Request the three pages. They return JSON, so no HTML parsing is needed."""
    result = get_token_req(keyword)
    # Start from None so a failed page doesn't leave a variable undefined
    result_1 = result_2 = result_3 = None

    # Page 1: interest over time
    try:
        data_1 = fetch_widget('multiline', result['req_1'], result['token_1'])
        if data_1 is not None:
            result_1 = pd.json_normalize(data_1['default']['timelineData'])
            # 'value' holds one number per compared keyword; only one is compared here
            result_1['value'] = result_1['value'].map(lambda x: x[0])
            result_1['keyword'] = keyword
    except Exception as e:
        print(e)
        result_1 = None

    # Page 2: related topics
    try:
        data_2 = fetch_widget('relatedsearches', result['req_2'], result['token_2'])
        if data_2 is not None:
            result_2 = parse_ranked(data_2, keyword)
    except Exception as e:
        print(e)

    # Page 3: related queries (same endpoint as page 2, with its own req/token)
    try:
        data_3 = fetch_widget('relatedsearches', result['req_3'], result['token_3'])
        if data_3 is not None:
            result_3 = parse_ranked(data_3, keyword)
    except Exception as e:
        print(e)

    return [result_1, result_2, result_3]


def main():
    global time_trends, related_search, related_topic
    # One keyword per line; blank lines are skipped
    with open(r'c:\users\desktop\words.txt', 'r', encoding='utf-8') as f:
        words = [line.strip() for line in f if line.strip()]
    for keyword in words:
        data_all = get_info(keyword)
        # pd.concat silently drops None entries, so failed pages are skipped
        time_trends = pd.concat([time_trends, data_all[0]], sort=False)
        related_topic = pd.concat([related_topic, data_all[1]], sort=False)
        related_search = pd.concat([related_search, data_all[2]], sort=False)
    # The results stay in the three global DataFrames; save them as needed (e.g. to_csv)


if __name__ == "__main__":
    main()
```
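Pages 2 and 3 both read `rankedList[1]`, so only one of the two ranked lists in each related-searches response is kept. The sketch below makes that choice explicit and flattens a response into plain rows without pandas.

Assumptions:

- `rankedList[0]` is the "top" list and `rankedList[1]` is the "rising" list.
- Each `rankedKeyword` item carries a site-relative `link`, which is what the script's `"https://trends.google.com" + link` implies.
- `ranked_rows` and `RANKED_LIST_INDEX` are hypothetical names.

```python
# Assumed position of each list in data["default"]["rankedList"]
RANKED_LIST_INDEX = {"top": 0, "rising": 1}


def ranked_rows(data: dict, keyword: str, which: str = "rising") -> list:
    """Flatten one relatedsearches response into a list of dicts, one per entry."""
    ranked = data["default"]["rankedList"][RANKED_LIST_INDEX[which]]["rankedKeyword"]
    rows = []
    for item in ranked:
        row = dict(item)  # copy so the parsed response is left untouched
        # make the site-relative link absolute
        row["link"] = "https://trends.google.com" + item["link"]
        row["keyword"] = keyword
        rows.append(row)
    return rows
```

The rows can go straight into `pd.DataFrame(rows)`. Calling it with `which="top"` returns the other list from the same response, with no extra request.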


Original article: https://blog.csdn.net/qq_42052864/article/details/115867602