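First, let me make one thing clear: I don't want to reinvent the wheel. If you want to set up a Hadoop environment, there are plenty of detailed step-by-step guides and commands online, and I don't want to record them all again.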

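Second, I'm a beginner myself and not very familiar with Hadoop. I just wanted to actually build the environment and see what it really looks like, and fortunately I finally did. When I ran the wordcount job, I was genuinely impressed by how well Hadoop handles distribution: even someone with no distributed-systems experience only needs to do some configuration to run a distributed cluster.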

All right, back to the point.

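A few things you need to know before setting up a Hadoop environment:

1. Hadoop runs on Linux, so you need to install a Linux operating system.

2. You need a cluster to run Hadoop on, for example several Linux machines on a LAN that can reach each other.

3. For the cluster machines to access each other, you need passwordless SSH login.

4. Hadoop runs on the JVM, so you need to install a Java JDK and set JAVA_HOME.

5. Hadoop's components are configured through XML files. After downloading Hadoop from the official website and extracting it, edit the corresponding configuration files in its etc/hadoop directory.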

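As the saying goes, to do a good job you must first sharpen your tools. So here are the software and tools used while building the Hadoop environment: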

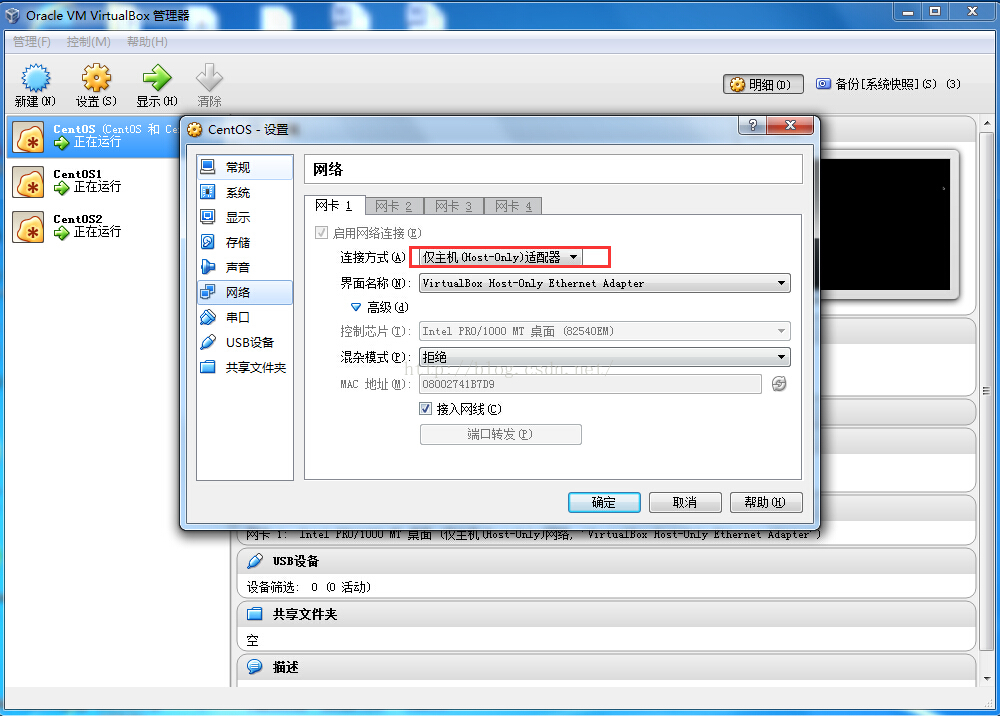

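1. VirtualBox: several Linux machines have to be simulated and resources are limited, so I created a few virtual machines in VirtualBox.

2. CentOS: download the CentOS 7 ISO image, load it into VirtualBox, then install and run it.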

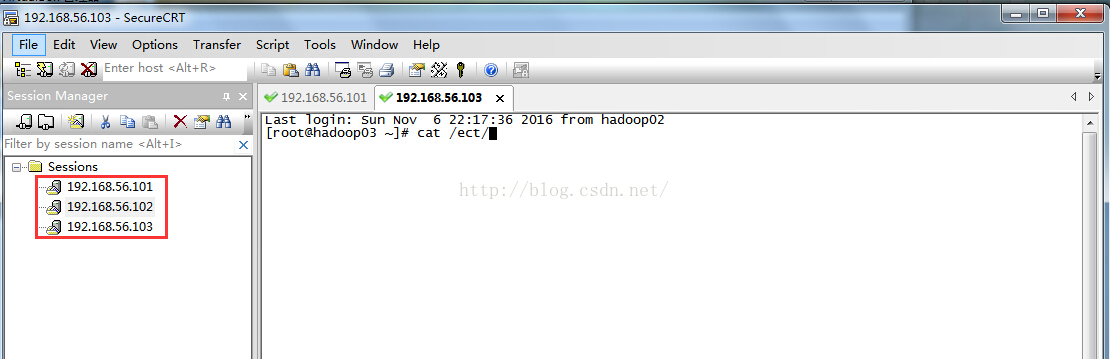

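3. SecureCRT: software for accessing Linux remotely over SSH.

4. WinSCP: for transferring files between Windows and Linux.

5. JDK for Linux: download it from Oracle's website, extract it, and do a little configuration.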

6. Hadoop 2.7.3: available from the Apache website.

All right, the rest is explained in three steps.

Preparing the Linux environment

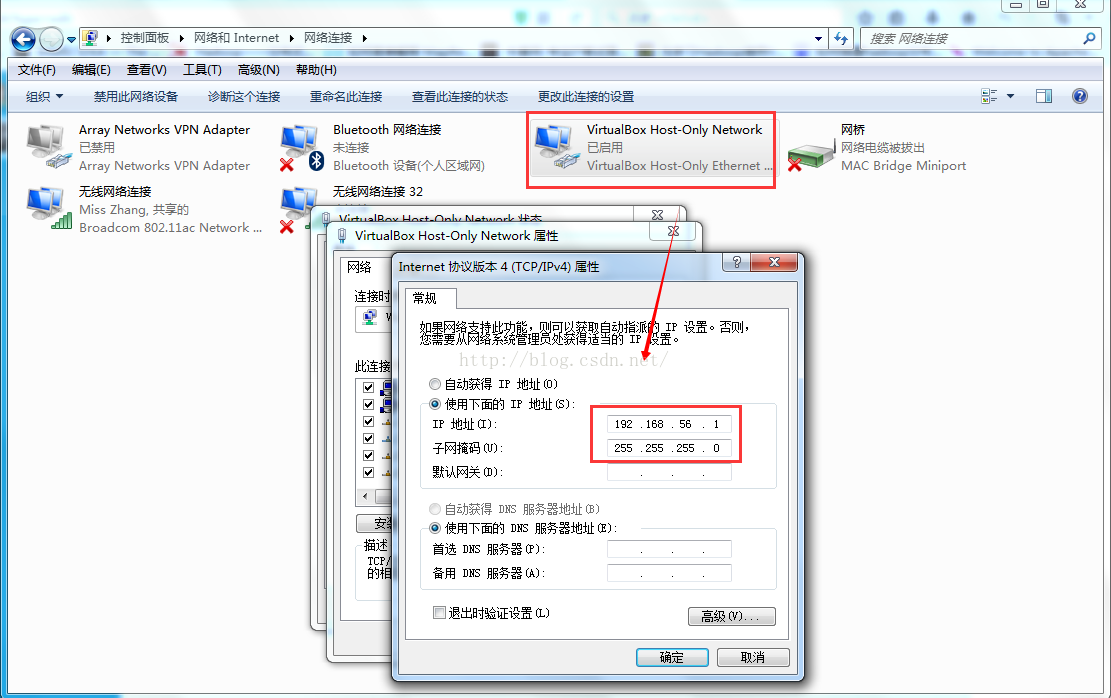

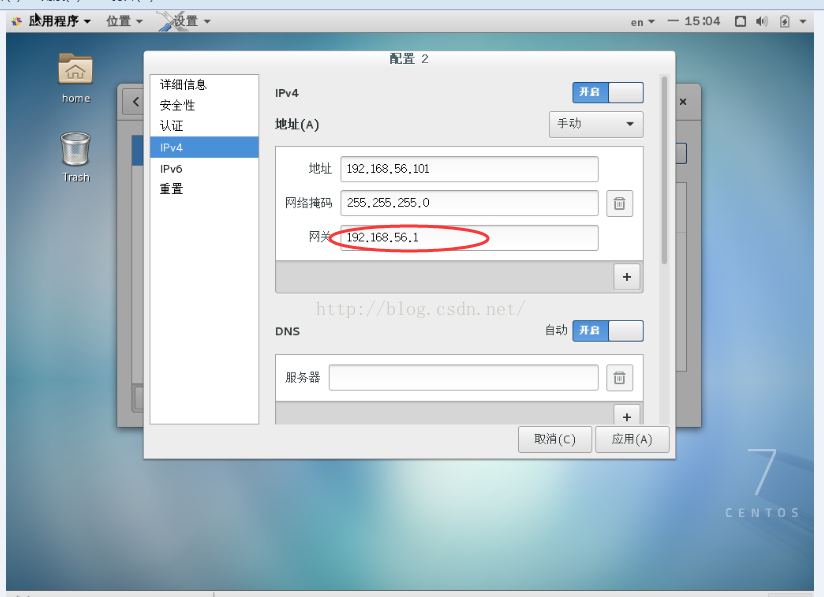

Configuring the IP address

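To let the host machine talk to the virtual machines, and the virtual machines talk to each other, set the network mode of the CentOS VMs in VirtualBox to Host-only and configure their IP addresses manually. Note that the VMs' gateway must be the IP address of the host's Host-only network adapter. After configuring the IP, restart the network service so the configuration takes effect. Three Linux machines were set up here, as shown in the figures.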
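As a sketch of the static-IP step, the function below prints a CentOS 7 `ifcfg` file for one node. The helper name `make_ifcfg`, the interface name `enp0s3`, the /24 netmask and the gateway `192.168.56.1` (VirtualBox's usual address for the host-only adapter) are assumptions; check yours with `ip addr` and in VirtualBox's host network settings.

```shell
# Print a static-IP ifcfg file for a CentOS 7 host-only interface.
# Assumptions (adjust to your setup): the interface name, a /24 netmask,
# and the VirtualBox host-only adapter at 192.168.56.1 as the gateway.
make_ifcfg() {
    local device="$1" ip="$2" gateway="${3:-192.168.56.1}"
    cat <<EOF
TYPE=Ethernet
DEVICE=${device}
NAME=${device}
BOOTPROTO=static
ONBOOT=yes
IPADDR=${ip}
NETMASK=255.255.255.0
GATEWAY=${gateway}
EOF
}
```

On hadoop01, for example: `make_ifcfg enp0s3 192.168.56.101 > /etc/sysconfig/network-scripts/ifcfg-enp0s3`, then `systemctl restart network`.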

Configuring host names

On 192.168.56.101, set the host name to hadoop01, and add the IP addresses and host names of all cluster nodes to the hosts file. Do the same on the other two hosts.

```
[root@hadoop01 ~]# cat /etc/sysconfig/network
# Created by anaconda
NETWORKING=yes
HOSTNAME=hadoop01
[root@hadoop01 ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.56.101 hadoop01
192.168.56.102 hadoop02
192.168.56.103 hadoop03
```
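On CentOS 7 the host name is normally set with `hostnamectl set-hostname hadoop01`. The hosts entries can be added with a small idempotent helper such as the hypothetical `add_cluster_hosts` below: it appends each `IP name` pair only if that exact line is not already present, so running it again on any node is harmless.

```shell
# Append the cluster's IP/host-name pairs to a hosts file, skipping
# lines that are already present (safe to run more than once).
add_cluster_hosts() {
    local hosts_file="$1"
    local entry
    for entry in \
        "192.168.56.101 hadoop01" \
        "192.168.56.102 hadoop02" \
        "192.168.56.103 hadoop03"; do
        # -x: match the whole line, -F: treat the entry as a fixed string
        grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
    done
}
```

Usage on each node: `add_cluster_hosts /etc/hosts`.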

Permanently disabling the firewall

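service iptables stop is not enough here: (1) the firewall would start again after the next reboot, so it has to be disabled permanently; (2) this is CentOS 7, whose default firewall is firewalld rather than iptables, so the commands are: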

```shell
systemctl stop firewalld.service      # stop firewalld now
systemctl disable firewalld.service   # keep firewalld from starting at boot
```

Disabling SELinux

Set SELINUX to disabled in /etc/sysconfig/selinux, then run reboot to restart the machine so the change takes effect:

```
[root@hadoop02 ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
#     enforcing - SELinux security policy is enforced.
#     permissive - SELinux prints warnings instead of enforcing.
#     disabled - No SELinux policy is loaded.
SELINUX=disabled
# SELINUXTYPE= can take one of three two values:
#     targeted - Targeted processes are protected,
#     minimum - Modification of targeted policy. Only selected processes are protected.
#     mls - Multi Level Security protection.
SELINUXTYPE=targeted
```
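The edit can also be scripted. The sketch below (the function name `disable_selinux_config` is hypothetical) rewrites the `SELINUX=` line with `sed` and targets /etc/selinux/config directly: on CentOS 7, /etc/sysconfig/selinux is a symlink to that file, and GNU `sed -i` run on a symlink replaces the link with a regular file instead of changing the real configuration.

```shell
# Set SELINUX=disabled in the given SELinux config file.
# The pattern does not touch SELINUXTYPE=, because that line has
# "TYPE" between "SELINUX" and "=".
disable_selinux_config() {
    local config_file="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config_file"
}
```

Usage: `disable_selinux_config && setenforce 0`. `setenforce 0` switches the running system to permissive mode right away, and the reboot then applies `disabled`.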

Passwordless SSH login across the cluster

First, generate an SSH key pair:

```shell
ssh-keygen -t rsa
```

Copy the public key to all three machines:

```shell
ssh-copy-id 192.168.56.101
ssh-copy-id 192.168.56.102
ssh-copy-id 192.168.56.103
```

Now, if hadoop01 wants to log in to hadoop02, just run ssh hadoop02 (the name is resolved through /etc/hosts):

```shell
ssh hadoop02
```
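Key generation and distribution can be run as one loop. This is a sketch under assumptions: it runs as root, `-N ''` creates a key without a passphrase, and the `run` helper with its `DRY_RUN` switch is a hypothetical addition that only prints the commands when `DRY_RUN` is set. Since start-yarn.sh is run on hadoop02 and also logs in to every node over SSH, the same steps are worth running on hadoop02 as well.

```shell
# Print commands instead of running them when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_ssh_keys() {
    # Create an RSA key pair without a passphrase, unless one exists.
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run mkdir -p -m 700 "$HOME/.ssh"
        run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
    fi
    # Copy the public key to every node, including this one.
    local host
    for host in 192.168.56.101 192.168.56.102 192.168.56.103; do
        run ssh-copy-id "root@$host"
    done
}
```

Preview with `DRY_RUN=1 setup_ssh_keys`, then run `setup_ssh_keys` for real; ssh-copy-id asks for each node's root password once.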

Configuring the JDK

Three directories are created under /home here:

tools: for tool packages

softwares: for installed software

data: for data

Upload the downloaded Linux JDK to /home/tools on hadoop01 with WinSCP.

Extract the JDK into softwares:

```shell
cd /home/tools
# -C (uppercase) sets the target directory; lowercase -c would mean "create an archive"
tar -zxf jdk-7u76-linux-x64.tar.gz -C /home/softwares
```

The JDK home directory is then /home/softwares/jdk.x.x.x. In /etc/profile, set JAVA_HOME to this path and add its bin directory to PATH:

```shell
export JAVA_HOME=/home/softwares/jdk1.8.0_111
export PATH=$PATH:$JAVA_HOME/bin
```

Save the change and run source /etc/profile to apply it.

Check whether the JDK was installed successfully:

```shell
java -version
```
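The /etc/profile edit can be made repeatable with a helper like the hypothetical `add_java_env` below, which appends the two export lines only if JAVA_HOME is not already exported in the file. The JDK path is the one used in this article; adjust it to the directory your archive actually extracted to.

```shell
# Append JAVA_HOME/PATH exports to a profile file, only once.
add_java_env() {
    local profile="$1" java_home="$2"
    if ! grep -q '^export JAVA_HOME=' "$profile"; then
        {
            echo "export JAVA_HOME=$java_home"
            # Single quotes keep $PATH and $JAVA_HOME unexpanded in the file.
            echo 'export PATH=$PATH:$JAVA_HOME/bin'
        } >> "$profile"
    fi
}
```

Usage: `add_java_env /etc/profile /home/softwares/jdk1.8.0_111 && source /etc/profile && java -version`.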

The files set up on this node can then be copied to the other nodes (10x stands for 102 and 103):

```shell
scp -r /home/* root@192.168.56.10x:/home
```
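Note that copying /home brings over the JDK but not /etc/profile, so the other nodes still lack the JAVA_HOME lines. A sketch that copies both to hadoop02 and hadoop03, assuming passwordless SSH as root already works and that replacing their /etc/profile with hadoop01's copy is acceptable (all three are fresh CentOS 7 installs here). The `run`/`DRY_RUN` helper is hypothetical and only prints the commands when `DRY_RUN` is set.

```shell
# Print commands instead of running them when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Copy the three /home directories and the profile to the other nodes.
sync_to_nodes() {
    local host
    for host in 192.168.56.102 192.168.56.103; do
        run scp -r /home/tools /home/softwares /home/data "root@$host:/home"
        run scp /etc/profile "root@$host:/etc/profile"
    done
}
```

Run `sync_to_nodes` on hadoop01, then run `source /etc/profile` (or log in again) on each target node.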

Installing the Hadoop cluster

The cluster plan is as follows:

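Node 101 (hadoop01) is the HDFS NameNode, node 102 (hadoop02) is the YARN ResourceManager, and node 103 (hadoop03) is the SecondaryNameNode; all three nodes also run a DataNode and a NodeManager. The JobHistoryServer is started on 101 and the WebAppProxyServer on 102.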

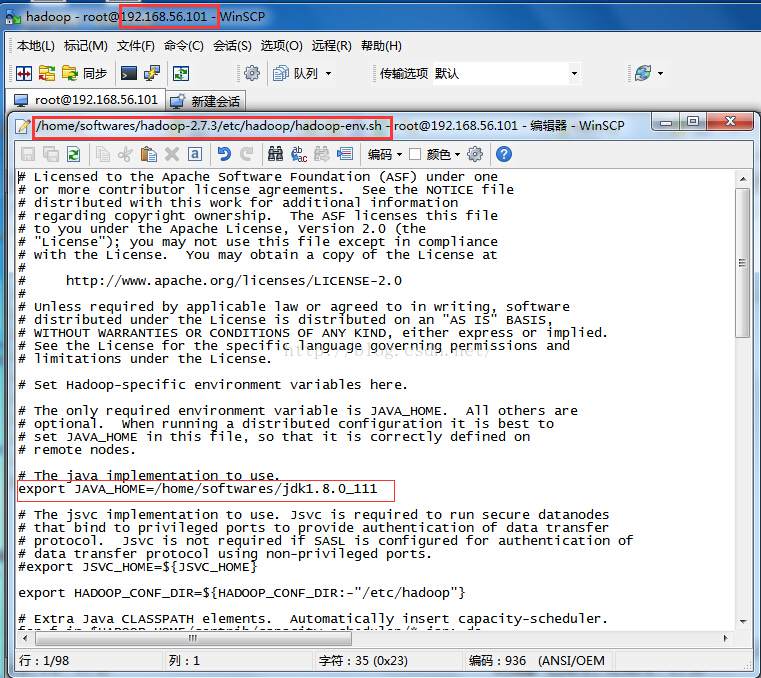

Download hadoop-2.7.3

Extract it into /home/softwares. Since Hadoop needs the JDK, first set JAVA_HOME in etc/hadoop/hadoop-env.sh under the Hadoop directory.
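By default hadoop-env.sh contains `export JAVA_HOME=${JAVA_HOME}`, which is empty when the start scripts launch daemons over non-interactive SSH sessions that do not read /etc/profile; hence the fixed path. A sketch using `sed` (the function name `set_hadoop_java_home` is hypothetical; the paths are the ones used in this article):

```shell
# Replace the JAVA_HOME export in hadoop-env.sh with a fixed path.
set_hadoop_java_home() {
    local env_file="$1" java_home="$2"
    # '|' as the sed delimiter, because the path itself contains '/'.
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
}
```

Usage: `set_hadoop_java_home /home/softwares/hadoop-2.7.3/etc/hadoop/hadoop-env.sh /home/softwares/jdk1.8.0_111`.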

(PS: I suspect the JDK version I used is a bit too new.)

Next, edit the XML file of each Hadoop component in turn.

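Edit core-site.xml:

set the NameNode address

change Hadoop's temporary directory

enable the HDFS trash (fs.trash.interval is in minutes, so 10080 keeps deleted files for 7 days)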

```xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.56.101:8020</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/softwares/hadoop-2.7.3/data/tmp</value>
    </property>
    <property>
        <name>fs.trash.interval</name>
        <value>10080</value>
    </property>
</configuration>
```
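Because all four *-site.xml files share the same `<property>` layout, they can also be generated from `name=value` pairs. The sketch below uses a hypothetical helper `write_site_xml` and assumes the values contain no XML special characters such as `&` or `<`. Once Hadoop is configured, `bin/hdfs getconf -confKey fs.defaultFS` prints the value Hadoop actually picks up.

```shell
# Write a Hadoop *-site.xml file from name=value arguments.
write_site_xml() {
    local out_file="$1"; shift
    local pair
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<configuration>'
        for pair in "$@"; do
            # Split at the first '=' only; values may contain ':' and '/'.
            echo '    <property>'
            echo "        <name>${pair%%=*}</name>"
            echo "        <value>${pair#*=}</value>"
            echo '    </property>'
        done
        echo '</configuration>'
    } > "$out_file"
}
```

For example, from the Hadoop home directory: `write_site_xml etc/hadoop/core-site.xml fs.defaultFS=hdfs://192.168.56.101:8020 hadoop.tmp.dir=/home/softwares/hadoop-2.7.3/data/tmp fs.trash.interval=10080`.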

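Edit hdfs-site.xml:

set the number of replicas

disable permission checking

set the HTTP address of the NameNode web UI

set the HTTP address of the SecondaryNameNode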

```xml
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>3</value>
    </property>
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>dfs.namenode.http-address</name>
        <value>192.168.56.101:50070</value>
    </property>
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>192.168.56.103:50090</value>
    </property>
</configuration>
```

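Rename mapred-site.xml.template to mapred-site.xml, then:

set the MapReduce framework to yarn, so jobs are scheduled by YARN

set the JobHistory server address

set the JobHistory web UI port

enable uber mode, an optimization for small MapReduce jobs that runs their tasks inside the ApplicationMaster's JVM instead of in separate containers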

```xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>192.168.56.101:10020</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>192.168.56.101:19888</value>
    </property>
    <property>
        <name>mapreduce.job.ubertask.enable</name>
        <value>true</value>
    </property>
</configuration>
```

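Edit yarn-site.xml:

set the NodeManager auxiliary service to mapreduce_shuffle

make node 102 the ResourceManager

run the web application proxy on node 102

enable YARN log aggregation

set how long aggregated logs are kept before deletion (604800 seconds, i.e. 7 days)

set the NodeManager's memory: 8 GB

set the NodeManager's CPU: 8 cores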

```xml
<configuration>
<!-- Site specific YARN configuration properties -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>192.168.56.102</value>
    </property>
    <property>
        <name>yarn.web-proxy.address</name>
        <value>192.168.56.102:8888</value>
    </property>
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
    </property>
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
    </property>
    <property>
        <name>yarn.nodemanager.resource.memory-mb</name>
        <value>8192</value>
    </property>
    <property>
        <name>yarn.nodemanager.resource.cpu-vcores</name>
        <value>8</value>
    </property>
</configuration>
```
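In Hadoop 2.7, 8192 MB and 8 vcores are also the default values, and the NodeManager only advertises them to the ResourceManager without checking the real hardware. A VirtualBox VM usually has much less memory, so containers could be scheduled beyond what it can hold. A rough sketch that derives a value from /proc/meminfo; the helper name and the 1024 MB kept back for the OS and the Hadoop daemons are arbitrary assumptions, not a Hadoop rule.

```shell
# Suggest yarn.nodemanager.resource.memory-mb from MemTotal, keeping a
# fixed reserve (an assumption) for the OS and the Hadoop daemons.
suggest_nm_memory_mb() {
    local meminfo="${1:-/proc/meminfo}" reserve_mb="${2:-1024}"
    local total_kb
    total_kb="$(awk '/^MemTotal:/ {print $2}' "$meminfo")"
    local available_mb=$(( total_kb / 1024 - reserve_mb ))
    # Never go below 1024 MB, YARN's default minimum container size;
    # with less, the NodeManager could not host a single container.
    if [ "$available_mb" -lt 1024 ]; then
        available_mb=1024
    fi
    echo "$available_mb"
}
```

Run `suggest_nm_memory_mb` on each VM; `nproc` gives a matching starting point for yarn.nodemanager.resource.cpu-vcores.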

Configuring slaves

List the worker nodes, i.e. the nodes that run a DataNode and a NodeManager, one per line in etc/hadoop/slaves:

192.168.56.101

192.168.56.102

192.168.56.103
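start-dfs.sh and start-yarn.sh read this file and log in over SSH to each listed host to start its DataNode and NodeManager; the file shipped with Hadoop contains just `localhost`. A sketch that writes it and checks that dfs.replication (3 here) does not exceed the number of DataNodes, since a higher value would leave every block under-replicated. Both function names are hypothetical.

```shell
# Write the worker list (one host per line) read by the start scripts.
write_slaves() {
    printf '%s\n' 192.168.56.101 192.168.56.102 192.168.56.103 > "$1"
}

# Succeed only if the replication factor fits the number of workers
# (counting non-blank lines of the slaves file).
replication_fits() {
    local slaves_file="$1" replication="$2"
    local workers
    workers="$(grep -c '[^[:space:]]' "$slaves_file")"
    [ "$replication" -le "$workers" ]
}
```

Usage from the Hadoop home directory: `write_slaves etc/hadoop/slaves && replication_fits etc/hadoop/slaves 3`.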

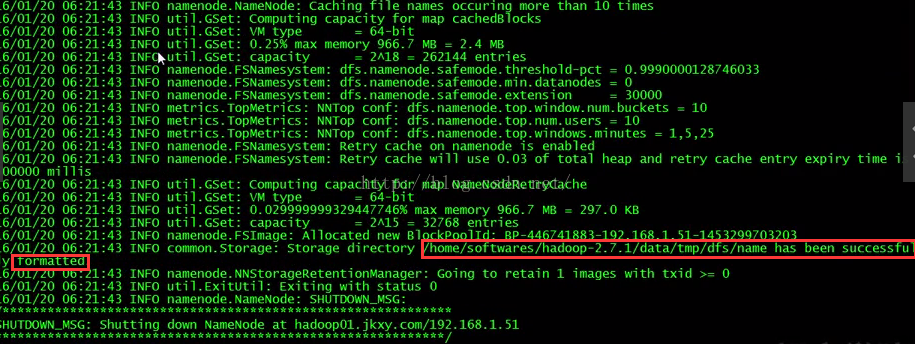

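First format HDFS on the NameNode, i.e. on node 101:

Go to the Hadoop home directory: cd /home/softwares/hadoop-2.7.3

Run the hadoop script in bin: bin/hadoop namenode -format (in Hadoop 2.x this form is deprecated and prints a warning; bin/hdfs namenode -format is the current equivalent)

The format succeeded only if the output reports that the storage directory "has been successfully formatted" (PS: the screenshot here is borrowed from someone else, I hope you don't mind).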

Once the configuration above is done, copy it to the other machines.
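Since every node needs the same Hadoop directory and configuration, the copy can be a loop over the other two hosts. This assumes passwordless SSH as root and the same directory layout on every node; the `run`/`DRY_RUN` helper is hypothetical and only prints the commands when `DRY_RUN` is set. If only the XML files change later, copying etc/hadoop alone is enough.

```shell
# Print commands instead of running them when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Copy the configured Hadoop directory from hadoop01 to the other nodes,
# into the same parent directory on each target.
distribute_hadoop() {
    local hadoop_home="${1:-/home/softwares/hadoop-2.7.3}"
    local host
    for host in 192.168.56.102 192.168.56.103; do
        run scp -r "$hadoop_home" "root@$host:$(dirname "$hadoop_home")"
    done
}
```

Preview with `DRY_RUN=1 distribute_hadoop`, then run `distribute_hadoop` on hadoop01.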

Testing the Hadoop environment

Go to the Hadoop home directory and run the corresponding scripts.

The jps command (Java Virtual Machine Process Status Tool) lists the running Java processes.

Start HDFS on the NameNode, machine 101:

```
[root@hadoop01 hadoop-2.7.3]# sbin/start-dfs.sh
Java HotSpot(TM) Client VM warning: You have loaded library /home/softwares/hadoop-2.7.3/lib/native/libhadoop.so which might have disabled stack guard. The VM will try to fix the stack guard now.
It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.
16/11/07 16:49:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [hadoop01]
hadoop01: starting namenode, logging to /home/softwares/hadoop-2.7.3/logs/hadoop-root-namenode-hadoop01.out
192.168.56.102: starting datanode, logging to /home/softwares/hadoop-2.7.3/logs/hadoop-root-datanode-hadoop02.out
192.168.56.103: starting datanode, logging to /home/softwares/hadoop-2.7.3/logs/hadoop-root-datanode-hadoop03.out
192.168.56.101: starting datanode, logging to /home/softwares/hadoop-2.7.3/logs/hadoop-root-datanode-hadoop01.out
Starting secondary namenodes [hadoop03]
hadoop03: starting secondarynamenode, logging to /home/softwares/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-hadoop03.out
```

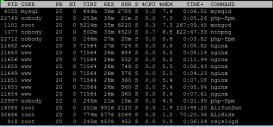

Running jps on node 101 now shows that the NameNode and DataNode have started:

```
[root@hadoop01 hadoop-2.7.3]# jps
7826 Jps
7270 DataNode
7052 NameNode
```

Running jps on nodes 102 and 103 shows that their DataNodes have started (103 also runs the SecondaryNameNode):

```
[root@hadoop02 bin]# jps
4260 DataNode
4488 Jps
[root@hadoop03 ~]# jps
6436 SecondaryNameNode
6750 Jps
6191 DataNode
```

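Starting YARN

Run this on node 102, because start-yarn.sh starts the ResourceManager on the machine where it is invoked: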

```
[root@hadoop02 hadoop-2.7.3]# sbin/start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /home/softwares/hadoop-2.7.3/logs/yarn-root-resourcemanager-hadoop02.out
192.168.56.101: starting nodemanager, logging to /home/softwares/hadoop-2.7.3/logs/yarn-root-nodemanager-hadoop01.out
192.168.56.103: starting nodemanager, logging to /home/softwares/hadoop-2.7.3/logs/yarn-root-nodemanager-hadoop03.out
192.168.56.102: starting nodemanager, logging to /home/softwares/hadoop-2.7.3/logs/yarn-root-nodemanager-hadoop02.out
```

Check each node with jps:

```
[root@hadoop02 hadoop-2.7.3]# jps
4641 ResourceManager
4260 DataNode
4765 NodeManager
5165 Jps
[root@hadoop01 hadoop-2.7.3]# jps
7270 DataNode
8375 Jps
7976 NodeManager
7052 NameNode
[root@hadoop03 ~]# jps
6915 NodeManager
6436 SecondaryNameNode
7287 Jps
6191 DataNode
```
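Checking three nodes by eye gets tedious, so here is a sketch of a helper that compares jps output with the daemons a node should run according to the cluster plan. The function name is hypothetical. In the remote command, `source /etc/profile` is needed because a non-interactive SSH session does not read /etc/profile, where JAVA_HOME/bin was added to PATH.

```shell
# Return non-zero, naming the missing daemons on stderr, if any expected
# process is absent from the given jps output.
check_daemons() {
    local jps_output="$1"; shift
    local daemon missing=0
    for daemon in "$@"; do
        # jps prints "<pid> <MainClassName>" per line; compare the class name.
        if ! printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx "$daemon"; then
            echo "missing: $daemon" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example: `check_daemons "$(ssh hadoop02 'source /etc/profile; jps')" ResourceManager NodeManager DataNode`.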

Start the JobHistory server and the web proxy server on their respective nodes:

```
[root@hadoop01 hadoop-2.7.3]# sbin/mr-jobhistory-daemon.sh start historyserver
starting historyserver, logging to /home/softwares/hadoop-2.7.3/logs/mapred-root-historyserver-hadoop01.out
[root@hadoop01 hadoop-2.7.3]# jps
8624 Jps
7270 DataNode
7976 NodeManager
8553 JobHistoryServer
7052 NameNode
[root@hadoop02 hadoop-2.7.3]# sbin/yarn-daemon.sh start proxyserver
starting proxyserver, logging to /home/softwares/hadoop-2.7.3/logs/yarn-root-proxyserver-hadoop02.out
[root@hadoop02 hadoop-2.7.3]# jps
4641 ResourceManager
4260 DataNode
5367 WebAppProxyServer
5402 Jps
4765 NodeManager
```
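The start order above can be collected into one script run on hadoop01, with a matching stop in reverse order. A sketch assuming passwordless SSH from hadoop01 to hadoop02 and JAVA_HOME fixed in hadoop-env.sh (the remote commands run in non-interactive SSH sessions); the `run`/`DRY_RUN` helper and both function names are hypothetical.

```shell
# Print commands instead of running them when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

HADOOP_HOME=/home/softwares/hadoop-2.7.3   # path used in this article

# HDFS and the history server start locally on hadoop01;
# YARN and the web proxy start on hadoop02 over SSH.
start_cluster() {
    run "$HADOOP_HOME/sbin/start-dfs.sh"
    run ssh root@192.168.56.102 "$HADOOP_HOME/sbin/start-yarn.sh"
    run "$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh" start historyserver
    run ssh root@192.168.56.102 "$HADOOP_HOME/sbin/yarn-daemon.sh start proxyserver"
}

# Stop everything in the reverse order.
stop_cluster() {
    run ssh root@192.168.56.102 "$HADOOP_HOME/sbin/yarn-daemon.sh stop proxyserver"
    run "$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh" stop historyserver
    run ssh root@192.168.56.102 "$HADOOP_HOME/sbin/stop-yarn.sh"
    run "$HADOOP_HOME/sbin/stop-dfs.sh"
}
```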

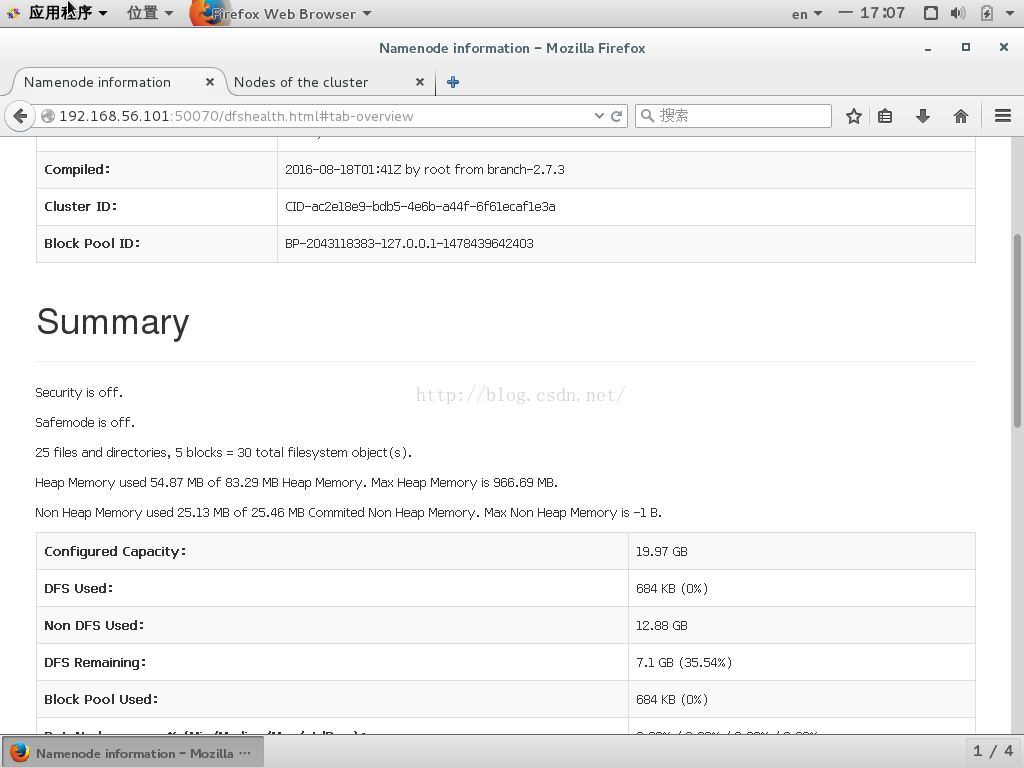

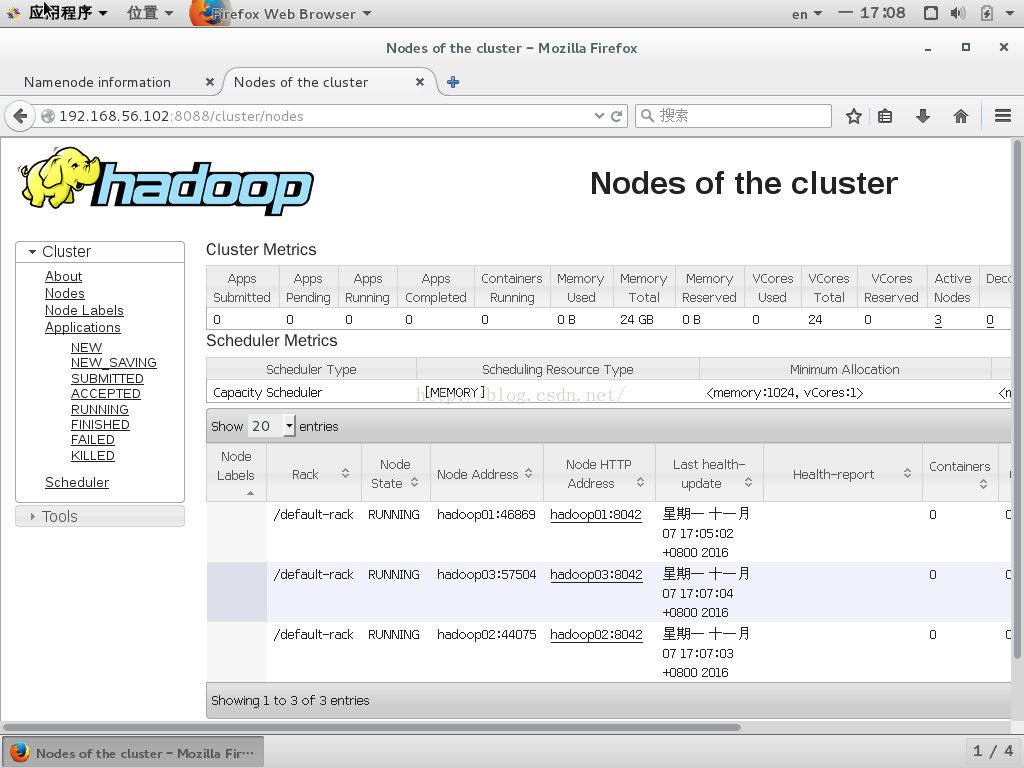

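On the hadoop01 node (node 101), check the state of the nodes in a browser: the NameNode web UI is at http://192.168.56.101:50070 (as set in hdfs-site.xml), and the ResourceManager web UI listens on YARN's default port 8088 on hadoop02.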

Uploading a file to HDFS

```
[root@hadoop01 hadoop-2.7.3]# bin/hdfs dfs -put /etc/profile /profile
```

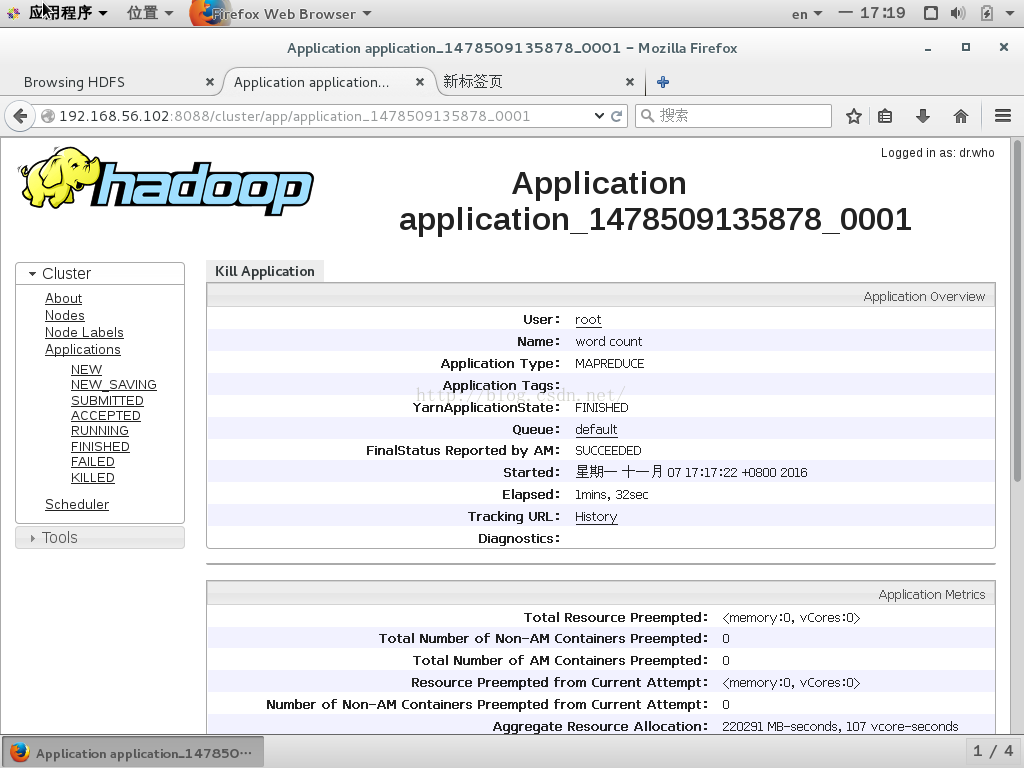

Running the wordcount program

```
[root@hadoop01 hadoop-2.7.3]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /profile /fll_out
Java HotSpot(TM) Client VM warning: You have loaded library /home/softwares/hadoop-2.7.3/lib/native/libhadoop.so which might have disabled stack guard. The VM will try to fix the stack guard now.
It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.
16/11/07 17:17:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/11/07 17:17:12 INFO client.RMProxy: Connecting to ResourceManager at /192.168.56.102:8032
16/11/07 17:17:18 INFO input.FileInputFormat: Total input paths to process : 1
16/11/07 17:17:19 INFO mapreduce.JobSubmitter: number of splits:1
16/11/07 17:17:19 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1478509135878_0001
16/11/07 17:17:20 INFO impl.YarnClientImpl: Submitted application application_1478509135878_0001
16/11/07 17:17:20 INFO mapreduce.Job: The url to track the job: http://192.168.56.102:8888/proxy/application_1478509135878_0001/
16/11/07 17:17:20 INFO mapreduce.Job: Running job: job_1478509135878_0001
16/11/07 17:18:34 INFO mapreduce.Job: Job job_1478509135878_0001 running in uber mode : true
16/11/07 17:18:35 INFO mapreduce.Job:  map 0% reduce 0%
16/11/07 17:18:43 INFO mapreduce.Job:  map 100% reduce 0%
16/11/07 17:18:50 INFO mapreduce.Job:  map 100% reduce 100%
16/11/07 17:18:55 INFO mapreduce.Job: Job job_1478509135878_0001 completed successfully
16/11/07 17:18:59 INFO mapreduce.Job: Counters: 52
	File System Counters
		FILE: Number of bytes read=4264
		FILE: Number of bytes written=6412
		FILE: Number of read operations=0
		FILE: Number of large read operations=0
		FILE: Number of write operations=0
		HDFS: Number of bytes read=3940
		HDFS: Number of bytes written=261673
		HDFS: Number of read operations=35
		HDFS: Number of large read operations=0
		HDFS: Number of write operations=8
	Job Counters
		Launched map tasks=1
		Launched reduce tasks=1
		Other local map tasks=1
		Total time spent by all maps in occupied slots (ms)=8246
		Total time spent by all reduces in occupied slots (ms)=7538
		TOTAL_LAUNCHED_UBERTASKS=2
		NUM_UBER_SUBMAPS=1
		NUM_UBER_SUBREDUCES=1
		Total time spent by all map tasks (ms)=8246
		Total time spent by all reduce tasks (ms)=7538
		Total vcore-milliseconds taken by all map tasks=8246
		Total vcore-milliseconds taken by all reduce tasks=7538
		Total megabyte-milliseconds taken by all map tasks=8443904
		Total megabyte-milliseconds taken by all reduce tasks=7718912
	Map-Reduce Framework
		Map input records=78
		Map output records=256
		Map output bytes=2605
		Map output materialized bytes=2116
		Input split bytes=99
		Combine input records=256
		Combine output records=156
		Reduce input groups=156
		Reduce shuffle bytes=2116
		Reduce input records=156
		Reduce output records=156
		Spilled Records=312
		Shuffled Maps =1
		Failed Shuffles=0
		Merged Map outputs=1
		GC time elapsed (ms)=870
		CPU time spent (ms)=1970
		Physical memory (bytes) snapshot=243326976
		Virtual memory (bytes) snapshot=2666557440
		Total committed heap usage (bytes)=256876544
	Shuffle Errors
		BAD_ID=0
		CONNECTION=0
		IO_ERROR=0
		WRONG_LENGTH=0
		WRONG_MAP=0
		WRONG_REDUCE=0
	File Input Format Counters
		Bytes Read=1829
	File Output Format Counters
		Bytes Written=1487
```
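MapReduce refuses to run if the output directory already exists (the job fails with a FileAlreadyExistsException), so a second run of the example needs the old /fll_out removed first. A sketch with a hypothetical `run`/`DRY_RUN` helper: `-rm -r -f` does not complain if the directory is missing, and because fs.trash.interval is set, the removed files go to the HDFS trash (`-skipTrash` would delete them immediately).

```shell
# Print commands instead of running them when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

HADOOP_HOME=/home/softwares/hadoop-2.7.3   # path used in this article

# Re-run the wordcount example, removing a previous output directory first.
rerun_wordcount() {
    local input="${1:-/profile}" output="${2:-/fll_out}"
    run "$HADOOP_HOME/bin/hdfs" dfs -rm -r -f "$output"
    run "$HADOOP_HOME/bin/hadoop" jar \
        "$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar" \
        wordcount "$input" "$output"
}
```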

Check the job's status through the YARN web UI in the browser:

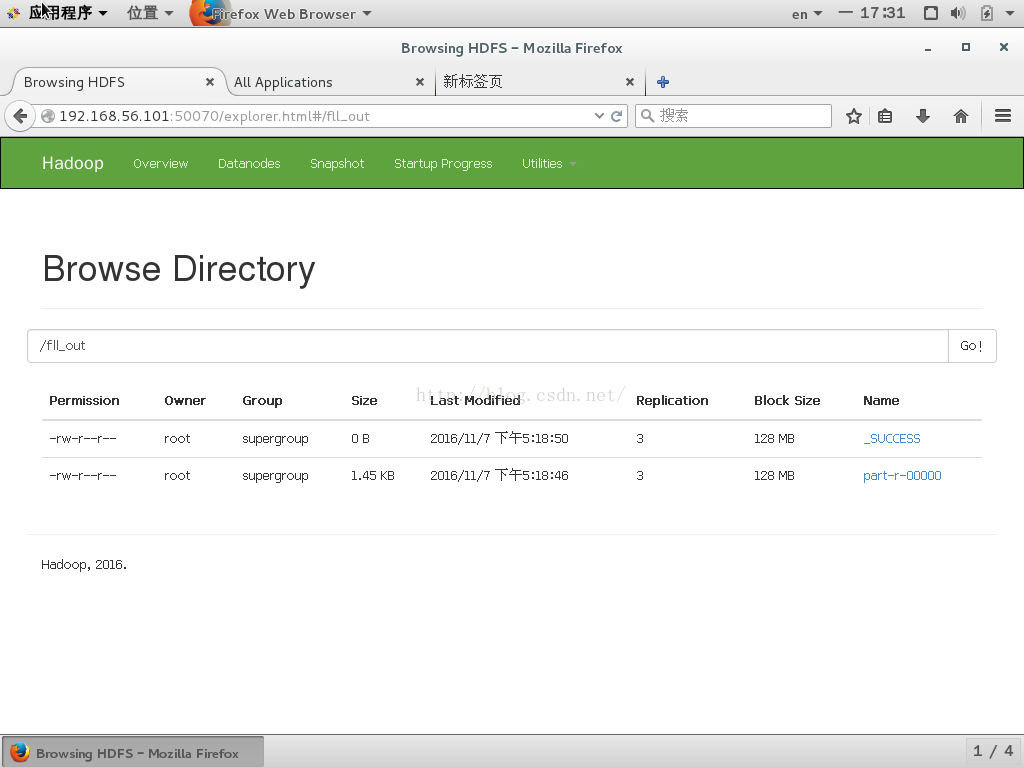

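View the final word-count results

Besides browsing the HDFS file system in the browser, you can print the output file from the command line: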

```
[root@hadoop01 hadoop-2.7.3]# bin/hdfs dfs -cat /fll_out/part-r-00000
Java HotSpot(TM) Client VM warning: You have loaded library /home/softwares/hadoop-2.7.3/lib/native/libhadoop.so which might have disabled stack guard. The VM will try to fix the stack guard now.
It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.
16/11/07 17:29:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
!=	1
"$-"	1
"$2"	1
"$EUID"	2
"$HISTCONTROL"	1
"$i"	3
"${-#*i}"	1
"0"	1
":${PATH}:"	1
"`id	2
"after"	1
"ignorespace"	1
#	13
$UID	1
&&	1
()	1
*)	1
*:"$1":*)	1
-f	1
-gn`"	1
-gt	1
-r	1
-ru`	1
-u`	1
-un`"	2
-x	1
-z	1
.	2
/etc/bashrc	1
/etc/profile	1
/etc/profile.d/	1
/etc/profile.d/*.sh	1
/usr/bin/id	1
/usr/local/sbin	2
/usr/sbin	2
/usr/share/doc/setup-*/uidgid	1
002	1
022	1
199	1
200	1
2>/dev/null`	1
;	3
;;	1
=	4
>/dev/null	1
By	1
Current	1
EUID=`id	1
Functions	1
HISTCONTROL	1
HISTCONTROL=ignoreboth	1
HISTCONTROL=ignoredups	1
HISTSIZE	1
HISTSIZE=1000	1
HOSTNAME	1
HOSTNAME=`/usr/bin/hostname	1
It's	2
JAVA_HOME=/home/softwares/jdk1.8.0_111	1
LOGNAME	1
LOGNAME=$USER	1
MAIL	1
MAIL="/var/spool/mail/$USER"	1
NOT	1
PATH	1
PATH=$1:$PATH	1
PATH=$PATH:$1	1
PATH=$PATH:$JAVA_HOME/bin	1
Path	1
System	1
This	1
UID=`id	1
USER	1
USER="`id	1
You	1
[	9
]	3
];	6
a	2
after	2
aliases	1
and	2
are	1
as	1
better	1
case	1
change	1
changes	1
check	1
could	1
create	1
custom	1
custom.sh	1
default,	1
do	1
doing.	1
done	1
else	5
environment	1
environment,	1
esac	1
export	5
fi	8
file	2
for	5
future	1
get	1
go	1
good	1
i	2
idea	1
if	8
in	6
is	1
it	1
know	1
ksh	1
login	2
make	1
manipulation	1
merging	1
much	1
need	1
pathmunge	6
prevent	1
programs,	1
reservation	1
reserved	1
script	1
set.	1
sets	1
setup	1
shell	2
startup	1
system	1
the	1
then	8
this	2
threshold	1
to	5
uid/gids	1
uidgid	1
umask	3
unless	1
unset	2
updates.	1
validity	1
want	1
we	1
what	1
wide	1
will	1
workaround	1
you	2
your	1
{	1
}	1
```
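The output file holds one `word<TAB>count` pair per line, sorted by word. To see the most frequent tokens first, it can be piped through `sort`; a sketch with a hypothetical function name:

```shell
# Print the N most frequent words from wordcount output on stdin.
# Each line is "word<TAB>count"; sort numerically on field 2, descending.
top_words() {
    local n="${1:-10}"
    sort -t "$(printf '\t')" -k2,2nr | head -n "$n"
}
```

Usage: `bin/hdfs dfs -cat /fll_out/part-r-00000 | top_words 2`. On the output above, the top two lines are `#` (13) and `[` (9).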

This shows that the Hadoop cluster works correctly.


Original article: http://blog.csdn.net/fffllllll/article/details/53066073