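In day-to-day scraping practice, the data we crawl needs to be saved. For images, Scrapy provides the ImagesPipeline class, which already wraps the download-and-save logic, so we can use it directly.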

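When downloading images with ImagesPipeline, we need to override three of the pipeline class's methods:

- get_media_requests sends a request for each image URL
- file_path returns the image file name
- item_completed returns the item, handing it to the next pipeline class to be executed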

So what does the code look like? First, in pipelines.py, import the ImagesPipeline class, then override the three methods described above:

```python
import scrapy
from scrapy.pipelines.images import ImagesPipeline


class ImgsPipline(ImagesPipeline):
    def get_media_requests(self, item, info):
        # Request the image URL and carry the item along in meta
        yield scrapy.Request(url=item['img_src'], meta={'item': item})

    # Only the image file name needs to be returned.
    # The keyword-only item argument is accepted because newer Scrapy
    # versions pass it; here the item is still read from request.meta.
    def file_path(self, request, response=None, info=None, *, item=None):
        item = request.meta['item']
        print('########', item)
        filepath = item['img_name']
        return filepath

    def item_completed(self, results, item, info):
        # Hand the item to the next pipeline class
        return item
```
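The item_completed method above ignores its results argument. As a minimal sketch, assuming the layout Scrapy documents for media pipelines (a list of (success, info) tuples, where info is a dict with a 'path' key on success and a failure object otherwise), a helper such as the hypothetical downloaded_paths below could pick out the images that were actually saved. The sample data is hand-written for illustration, not real crawl output.

```python
def downloaded_paths(results):
    """Return the storage paths of every successfully downloaded image.

    Assumes `results` is a list of (success, info) tuples as passed to
    item_completed; failed entries are skipped.
    """
    return [info["path"] for ok, info in results if ok]


# Hand-written sample data (hypothetical URL and values).
sample_results = [
    (True, {"url": "http://example.com/a.jpg", "path": "a.jpg", "checksum": "abc"}),
    (False, Exception("download failed")),
]
print(downloaded_paths(sample_results))
```

Inside item_completed, one option would be to raise scrapy.exceptions.DropItem when this list is empty, so that items without a saved image do not reach later pipelines.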

Once the methods are defined, we need to configure settings.py. First, specify where the images are saved with IMAGES_STORE = 'd:\\imgpro'; then enable the ImgsPipline pipeline:

```python
ITEM_PIPELINES = {
    # 300 is the priority; the smaller the number, the higher the priority
    'imgpro.pipelines.ImgsPipline': 300,
}
```
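To make the priority rule concrete, here is a small sketch that sorts a pipeline mapping the way the rule describes, lowest number first. Only the ImgsPipline entry comes from this article; the other two class paths and the execution_order helper are hypothetical and exist purely for illustration.

```python
ITEM_PIPELINES = {
    "imgpro.pipelines.ImgsPipline": 300,
    "imgpro.pipelines.CleanupPipeline": 100,  # hypothetical
    "imgpro.pipelines.ExportPipeline": 800,   # hypothetical
}


def execution_order(pipelines):
    """Return pipeline paths sorted from the lowest number to the highest."""
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


for path in execution_order(ITEM_PIPELINES):
    print(path)
```

With these numbers, an item would pass through the cleanup pipeline first, then the image pipeline, and the export pipeline last.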

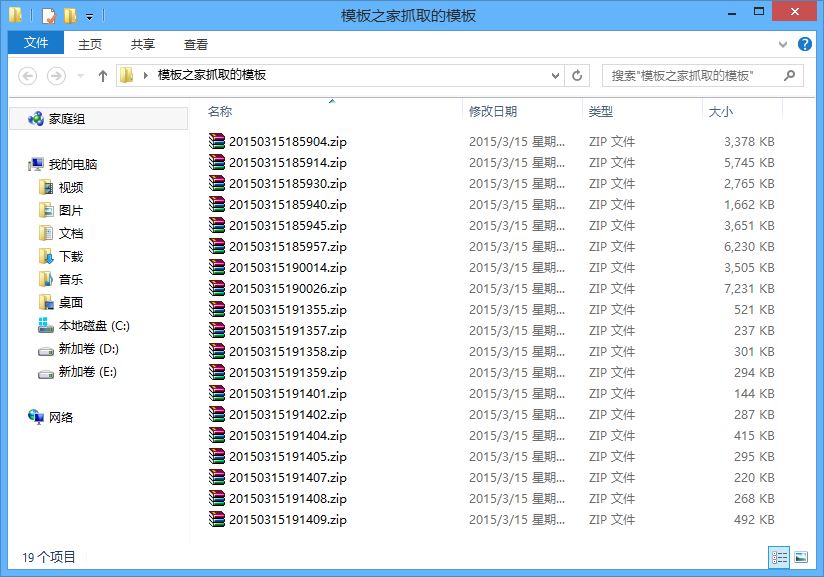

With the settings in place, run the program and the downloaded images will appear under d:\imgpro.

The complete code is as follows:

Spider file:

```python
# -*- coding: utf-8 -*-
import scrapy
from imgpro.items import ImgproItem


class ImgSpider(scrapy.Spider):
    name = 'img'
    allowed_domains = ['www.521609.com']
    start_urls = ['http://www.521609.com/daxuemeinv/']

    def parse(self, response):
        # Parse the image URL and the image name
        li_list = response.xpath('//div[@class="index_img list_center"]/ul/li')
        for li in li_list:
            item = ImgproItem()
            item['img_src'] = 'http://www.521609.com/' + li.xpath('./a[1]/img/@src').extract_first()
            item['img_name'] = li.xpath('./a[1]/img/@alt').extract_first() + '.jpg'
            # print('***********')
            # print(item)
            yield item
```
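The spider builds the image URL by string concatenation and uses the alt text directly as the file name. As a hedged sketch of a more defensive variant, the two hypothetical helpers below resolve the src with the standard library's urljoin and replace characters that Windows does not allow in file names. The '/uploads/a.jpg' path is an invented example, not taken from the site.

```python
import re
from urllib.parse import urljoin


def absolute_url(page_url, src):
    """Resolve a possibly relative image src against the page URL."""
    return urljoin(page_url, src)


def safe_file_name(alt_text, extension=".jpg"):
    """Replace characters Windows forbids in file names, then add the extension."""
    cleaned = re.sub(r'[\\/:*?"<>|]', "_", alt_text).strip()
    return (cleaned or "unnamed") + extension


# Invented sample values for illustration.
print(absolute_url("http://www.521609.com/daxuemeinv/", "/uploads/a.jpg"))
print(safe_file_name("a/b:c"))
```

Inside a Scrapy callback, response.urljoin(src) performs the same resolution against the response URL.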

items.py file:

```python
import scrapy


class ImgproItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    img_src = scrapy.Field()
    img_name = scrapy.Field()
```

pipelines.py file:

```python
import scrapy
from scrapy.pipelines.images import ImagesPipeline


class ImgsPipline(ImagesPipeline):
    def get_media_requests(self, item, info):
        # Request the image URL and carry the item along in meta
        yield scrapy.Request(url=item['img_src'], meta={'item': item})

    # Only the image file name needs to be returned.
    # The keyword-only item argument is accepted because newer Scrapy
    # versions pass it; here the item is still read from request.meta.
    def file_path(self, request, response=None, info=None, *, item=None):
        item = request.meta['item']
        print('########', item)
        filepath = item['img_name']
        return filepath

    def item_completed(self, results, item, info):
        # Hand the item to the next pipeline class
        return item
```
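Because file_path returns img_name unchanged, two images that share the same alt text would be written to the same file. One possible way around this, sketched here under that assumption, is to append a short digest of the image URL to the name. The unique_image_name helper and the example URLs are hypothetical and not part of the original project.

```python
import hashlib
import os


def unique_image_name(img_name, url):
    """Append a short SHA-1 digest of the URL so equal names do not collide."""
    stem, ext = os.path.splitext(img_name)
    digest = hashlib.sha1(url.encode("utf-8")).hexdigest()[:8]
    return f"{stem}_{digest}{ext or '.jpg'}"


# Same display name, different (invented) URLs, different file names.
first = unique_image_name("photo.jpg", "http://example.com/1.jpg")
second = unique_image_name("photo.jpg", "http://example.com/2.jpg")
print(first)
print(second)
```

In the pipeline, file_path could return unique_image_name(item['img_name'], request.url) instead of the bare name.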

settings.py file:

```python
import random

BOT_NAME = 'imgpro'

SPIDER_MODULES = ['imgpro.spiders']
NEWSPIDER_MODULE = 'imgpro.spiders'

IMAGES_STORE = 'd:\\imgpro'  # Where downloaded files are saved
LOG_LEVEL = "WARNING"
ROBOTSTXT_OBEY = False

# Set the User-Agent
USER_AGENTS_LIST = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
    "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
    "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
]
USER_AGENT = random.choice(USER_AGENTS_LIST)
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    # 'User-Agent': "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    'User-Agent': USER_AGENT,
}

# Enable the pipeline
ITEM_PIPELINES = {
    'imgpro.pipelines.ImgsPipline': 300,
}
```
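Note that random.choice in settings.py runs once when the settings are loaded, so every request in a run shares the same User-Agent. If a different one per request is wanted, a downloader middleware is one possible approach. The sketch below is not part of the original project: the class name is hypothetical, and it assumes the list is exposed as an uppercase setting named USER_AGENTS_LIST, since Scrapy only loads uppercase names from the settings module.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware that picks a User-Agent per request."""

    def __init__(self, user_agents):
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        # Assumes settings.py defines the list as USER_AGENTS_LIST (uppercase).
        return cls(crawler.settings.getlist("USER_AGENTS_LIST"))

    def process_request(self, request, spider):
        # Overwrite the header on each outgoing request.
        request.headers["User-Agent"] = random.choice(self.user_agents)
        return None  # let Scrapy continue handling the request normally
```

To try it, the class would need to be registered in DOWNLOADER_MIDDLEWARES under whatever module path it is saved at, for example a middlewares.py inside the project package.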

That is how to download and save images with ImagesPipeline. A question occurred to me today: is it really true that the better your crawler runs, the fuller you'll be on prison food? I'd appreciate answers from the experts!

Original link: https://blog.csdn.net/sl01224318/article/details/118873989