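Preface: I wrote this about a year ago. I ran it again recently, found that it still works, and decided to share it for anyone who wants to learn from it. I no longer remember many of the details of the code, but I have done my best to clean it up.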

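This is Weibo, after all, so its anti-scraping measures are thorough. Explaining how to get around them would take several articles on its own (dynamically loaded pages, AJAX requests, token keys, and so on; second-level comments in particular are buried deep, and I remember it took me a long time to get at them). So let's go straight to the code.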

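Main features:

0. Naturally, it gets past the various anti-scraping measures.

1. Crawls the main content of every hot-search entry.

2. Crawls the posts related to each hot-search entry.

3. Crawls the comments on each related post, along with details about the users who commented.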

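4. Implements automatic page turning (pagination). In theory it can capture any detail related to a hot search, but the data volume is fairly large, so a database is the recommended way to improve this crawler (I had not learned databases at the time, so the data is stored locally in a fixed format).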
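The database suggestion in item 4 could look roughly like the sketch below. It is not the author's code: it uses the standard-library sqlite3 module, and the class name SqlitePipeline, the default file name weibo.db, and the two-table layout are all assumptions made for illustration. It expects the same item keys that the spider in this article produces (title, href, author, news_time, brief_con, details_url, news_id, user).

```python
import sqlite3


class SqlitePipeline:
    """Hypothetical pipeline: stores posts and comments in SQLite instead of text files."""

    def __init__(self, db_path="weibo.db"):  # db_path is an assumed default
        self.db_path = db_path
        self.conn = None

    def open_spider(self, spider):
        self.conn = sqlite3.connect(self.db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS post ("
            "news_id TEXT PRIMARY KEY, title TEXT, href TEXT, author TEXT, "
            "news_time TEXT, brief_con TEXT, details_url TEXT)"
        )
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS comment ("
            "news_id TEXT, username TEXT, usertime TEXT, usercontent TEXT)"
        )

    def process_item(self, item, spider):
        # INSERT OR REPLACE keeps one row per post when the crawl is repeated
        self.conn.execute(
            "INSERT OR REPLACE INTO post VALUES (?, ?, ?, ?, ?, ?, ?)",
            (item["news_id"], item["title"], item["href"], item["author"],
             item["news_time"], item["brief_con"], item["details_url"]),
        )
        # Drop comments stored by an earlier run, then insert the fresh ones
        self.conn.execute("DELETE FROM comment WHERE news_id = ?", (item["news_id"],))
        self.conn.executemany(
            "INSERT INTO comment VALUES (?, ?, ?, ?)",
            [(item["news_id"], u[0], u[1], u[2]) for u in item["user"]],
        )
        self.conn.commit()
        return item

    def close_spider(self, spider):
        self.conn.close()
```

To try it, the class would be registered in ITEM_PIPELINES under whatever module path it is saved in, for example "weiboPro.pipelines.SqlitePipeline" (an assumed location).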

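(Not implemented):

Building a social network from the crawled data. The crawled users could be fed into Python data-analysis tools and assembled into a social network graph.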
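One possible starting point for this unimplemented idea is sketched below. It rests on an assumption about what "social network" means here: an edge from each commenter to the author of the post they commented on, weighted by the number of comments. The function names build_comment_graph and most_active are hypothetical, and the item layout (an author key plus a user list of [username, usertime, usercontent] entries) follows what the spider in this article yields.

```python
from collections import Counter, defaultdict


def build_comment_graph(items):
    """Return {commenter: Counter({post_author: number_of_comments})}."""
    graph = defaultdict(Counter)
    for item in items:
        author = item["author"]
        for username, _usertime, _content in item["user"]:
            if username != author:  # ignore authors commenting under their own post
                graph[username][author] += 1
    return graph


def most_active(graph, n=3):
    """Rank commenters by their total number of comments."""
    totals = Counter({user: sum(targets.values()) for user, targets in graph.items()})
    return totals.most_common(n)
```

Plain dictionaries are enough to count edges; a graph library could be swapped in later for layout or centrality measures.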

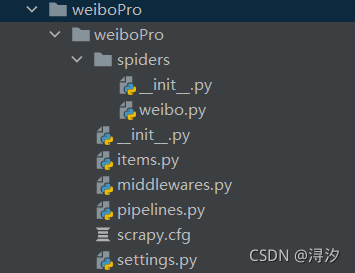

Project structure:

weibo.py

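Crawls the required data. Each callback parses a response and hands the result to an item, which is then passed to the pipeline for processing, including persisting the data.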

import scrapy
from copy import deepcopy
from time import sleep
import json
from lxml import etree
import re


class WeiboSpider(scrapy.Spider):
    name = "weibo"
    start_urls = ["https://s.weibo.com/top/summary?Refer=top_hot&topnav=1&wvr=6"]
    home_page = "https://s.weibo.com/"

    # Send the first request with cookies attached
    def start_requests(self):
        cookies = ""  # paste a cookie string from a logged-in browser session here
        # Split on the first "=" only (values may contain "=") and skip empty fragments
        cookies = {i.split("=", 1)[0]: i.split("=", 1)[1] for i in cookies.split("; ") if "=" in i}
        yield scrapy.Request(
            self.start_urls[0],
            callback=self.parse,
            cookies=cookies
        )

    # Parse the hot-search list: titles and links
    def parse(self, response, **kwargs):
        page_text = response.text
        # Keep a copy of the page for debugging
        with open("first.html", "w", encoding="utf-8") as fp:
            fp.write(page_text)
        item = {}
        # Skip the header row of the table
        tr = response.xpath('//*[@id="pl_top_realtimehot"]/table//tr')[1:]
        for t in tr:
            item["title"] = t.xpath("./td[2]//text()").extract()[1]
            print("title : ", item["title"])
            detail_url = self.home_page + t.xpath("./td[2]//@href").extract_first()
            item["href"] = detail_url
            print("href:", item["href"])
            yield scrapy.Request(detail_url, callback=self.parse_item, meta={"item": deepcopy(item)})
            # Note: sleep() blocks the whole crawler while it waits;
            # DOWNLOAD_DELAY in the settings is the usual alternative
            sleep(3)

    # Parse the posts shown on the first page of each hot search
    def parse_item(self, response, **kwargs):
        item = response.meta["item"]
        div_list = response.xpath('//div[@id="pl_feedlist_index"]//div[@class="card-wrap"]')[1:]
        for div in div_list:
            author = div.xpath('.//div[@class="info"]/div[2]/a/@nick-name').extract_first()
            brief_con = div.xpath('.//p[@node-type="feed_list_content_full"]//text()').extract()
            # extract() returns a list, so test for emptiness rather than None
            if not brief_con:
                brief_con = div.xpath('.//p[@class="txt"]//text()').extract()
            brief_con = "".join(brief_con)
            print("brief_con : ", brief_con)
            link = div.xpath('.//p[@class="from"]/a/@href').extract_first()
            news_id = div.xpath("./@mid").extract_first()
            if author is None or link is None or news_id is None:
                continue
            link = "https:" + link + "_&type=comment"
            print("news_id : ", news_id)
            news_time = div.xpath('.//p[@class="from"]/a/text()').extract()
            news_time = "".join(news_time)
            print("news_time:", news_time)
            print("author:", author)
            item["author"] = author
            item["news_id"] = news_id
            item["news_time"] = news_time
            item["brief_con"] = brief_con
            item["details_url"] = link
            # JSON link template: https://weibo.com/aj/v6/comment/big?ajwvr=6&id=4577307216321742&from=singleWeiBo
            link = "https://weibo.com/aj/v6/comment/big?ajwvr=6&id=" + news_id + "&from=singleWeiBo"
            yield scrapy.Request(link, callback=self.parse_detail, meta={"item": deepcopy(item)})

    # Parse the details and comments of each post
    # Post page:   https://weibo.com/1649173367/JwjbPDW00?refer_flag=1001030103__&type=comment
    # JSON packet: https://weibo.com/aj/v6/comment/big?ajwvr=6&id=4577307216321742&from=singleWeiBo&__rnd=1606879908312
    def parse_detail(self, response, **kwargs):
        item = response.meta["item"]
        # The comment HTML is embedded in the JSON payload under data.html
        comment_html = json.loads(response.text)["data"]["html"]
        # Keep a copy of the fragment for debugging
        with open("3.html", "w", encoding="utf-8") as fp:
            fp.write(comment_html)
        tree = etree.HTML(comment_html)
        # An earlier attempt used a regex, which works well because each comment
        # follows a full-width colon: re.findall(r"</a>:(.*?)<", comment_html)
        div_lists = tree.xpath('.//div[@class="list_con"]')
        final_lists = []
        for div in div_lists:
            names = div.xpath('./div[@class="WB_text"]/a[1]/text()')
            times = div.xpath('.//div[@class="WB_from S_txt2"]/text()')
            if not names or not times:
                continue  # skip blocks that are not regular comments
            username = names[0]
            usertime = times[0]
            usercontent = "".join(div.xpath('./div[@class="WB_text"]/text()'))
            item["username"] = username
            item["usertime"] = usertime
            item["usercontent"] = usercontent
            final_lists.append([username, usertime, usercontent])
        # All comments of the post, one [username, usertime, usercontent] entry each
        item["user"] = final_lists
        yield item
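The spider builds the comment endpoint by string concatenation and reads the HTML fragment straight out of the JSON payload. As a small illustration, both steps can be isolated with the standard library. The helper names comment_api_url and comment_html are hypothetical. The parameters ajwvr, id, from and __rnd come from the URL templates quoted in the spider's comments; treating __rnd as a millisecond timestamp is an assumption based on the sample value.

```python
import json
import time
from urllib.parse import urlencode

COMMENT_API = "https://weibo.com/aj/v6/comment/big"


def comment_api_url(news_id, with_rnd=False):
    """Build the JSON comment URL for one post id (the `mid` attribute)."""
    params = {"ajwvr": 6, "id": news_id, "from": "singleWeiBo"}
    if with_rnd:
        # Assumed to be a millisecond timestamp, like the sample 1606879908312
        params["__rnd"] = int(time.time() * 1000)
    return COMMENT_API + "?" + urlencode(params)


def comment_html(payload_text):
    """Return the HTML fragment stored under data.html, or "" if it is missing."""
    data = json.loads(payload_text).get("data") or {}
    return data.get("html", "")
```

Returning an empty string when data.html is absent lets the caller skip a post instead of failing with a KeyError when the endpoint answers with an error payload.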

items.py

Defines the fields of the parsed data that is handed to the pipeline.

import scrapy


class WeiboproItem(scrapy.Item):
    # Hot-search title
    title = scrapy.Field()
    # Hot-search link
    href = scrapy.Field()
    # Author of each post related to the hot search
    author = scrapy.Field()
    # Time each related post was published
    news_time = scrapy.Field()
    # Text of each related post
    brief_con = scrapy.Field()
    # Link to the detail page of each related post
    details_url = scrapy.Field()
    # Detail-page ID, required to fetch the JSON
    news_id = scrapy.Field()
    # Name of a commenter on the post's detail page
    username = scrapy.Field()
    # Time of a comment on the post's detail page
    usertime = scrapy.Field()
    # Text of a comment on the post's detail page
    usercontent = scrapy.Field()
    # All comments together with their authors
    user = scrapy.Field()

middlewares.py

Middleware that sits between the spider and the server and handles the traffic between them.

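import random


# Custom downloader middleware for Weibo requests
class WeiboproDownloaderMiddleware(object):
    def process_request(self, request, spider):
        # Set the cookie
        cookies = ""  # paste a cookie string from a logged-in browser session here
        # Split on the first "=" only (values may contain "=") and skip empty fragments
        cookies = {i.split("=", 1)[0]: i.split("=", 1)[1] for i in cookies.split("; ") if "=" in i}
        request.cookies = cookies
        # Pick a random User-Agent from the pool defined in the settings
        ua = random.choice(spider.settings.get("USER_AGENT_LIST"))
        request.headers["User-Agent"] = ua
        return None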
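Both the spider and the middleware turn a raw cookie string into a dict with a one-line comprehension. A slightly more defensive version is sketched here as a standalone function. The name parse_cookie_string is hypothetical, and the cookie names in the usage line are placeholders, not real Weibo values.

```python
def parse_cookie_string(raw):
    """Turn 'a=1; b=2' (as copied from browser dev tools) into {'a': '1', 'b': '2'}."""
    cookies = {}
    for pair in raw.split(";"):
        # partition() splits on the first "=" only, so values may contain "="
        name, sep, value = pair.strip().partition("=")
        if sep and name:  # skip empty fragments and pairs without "="
            cookies[name] = value
    return cookies


# Usage with placeholder names and values
print(parse_cookie_string("SUB=abc==; SUBP=xyz"))
```

An empty string yields an empty dict, so the crawler still starts when no cookie has been pasted in yet.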

pipelines.py

from itemadapter import ItemAdapter


class WeiboproPipeline:
    fp = None

    def open_spider(self, spider):
        print("starting...")

    def process_item(self, item, spider):
        title = item["title"]
        href = item["href"]
        author = item["author"]
        news_time = item["news_time"]
        brief_con = item["brief_con"]
        details_url = item["details_url"]
        news_id = item["news_id"]
        user = item["user"]
        # One text file per hot-search title; posts are appended to it
        filepath = "./" + title + ".txt"
        with open(filepath, "a", encoding="utf-8") as fp:
            fp.write(
                "title:\n" + title + "\n"
                + "href:\n" + href + "\n"
                + "author:\n" + author + "\n"
                + "news_time:\n" + news_time + "\n"
                + "brief_con\n" + brief_con + "\n"
                + "details_url:\n" + details_url + "\n"
                + "news_id" + news_id + "\n"
            )
            # Each entry in user is [username, usertime, usercontent]
            for u in user:
                fp.write("username:" + u[0] + "\n" + u[1] + "\n" + "usercontent:\n" + u[2] + "\n")
            fp.write("---------------------------------------------------------\n")
        return item
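Because the pipeline uses the hot-search title directly as a file name, a title containing / (or characters such as ? and : on Windows) can make open() fail. One way to guard against that is sketched below. The helper safe_filename is hypothetical, and the set of replaced characters is an assumption that covers path separators and the characters Windows rejects.

```python
import re

# Path separators, characters Windows rejects in file names, and control whitespace
_ILLEGAL = re.compile(r'[\\/:*?"<>|\r\n\t]')


def safe_filename(title, default="untitled"):
    """Replace characters that are unsafe in file names with underscores."""
    cleaned = _ILLEGAL.sub("_", title).strip(" .")
    return cleaned or default
```

In process_item the path could then be built as "./" + safe_filename(title) + ".txt", while the unmodified title is still written inside the file.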

settings.py

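Holds the spider's settings: the pool of browser User-Agent strings added here, plus options such as the number of concurrent requests and the crawl rate, all of which help the spider cope with anti-scraping measures.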

# Scrapy settings for weiboPro project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = "weiboPro"

SPIDER_MODULES = ["weiboPro.spiders"]

NEWSPIDER_MODULE = "weiboPro.spiders"

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = "weiboPro (+http://www.yourdomain.com)"

MEDIA_ALLOW_REDIRECTS = True

USER_AGENT_LIST = ["Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60",

"Opera/8.0 (Windows NT 5.1; U; en)",

"Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50",

# Firefox

"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0",

"Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10",

# Safari

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2",

# chrome

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36",

"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11",

"Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16",

# 360

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36",

"Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko",

# Taobao Browser

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11",

# Cheetah Browser (LBBROWSER)

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E; LBBROWSER)",

# QQ Browser

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)",

# Sogou Browser

"Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)",

# Maxthon Browser

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Maxthon/4.4.3.4000 Chrome/30.0.1599.101 Safari/537.36",

# UC Browser

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 UBrowser/4.0.3214.0 Safari/537.36"

]

LOG_LEVEL = "ERROR"

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

# "Accept-Language": "en",

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

# SPIDER_MIDDLEWARES = {

# "weiboPro.middlewares.WeiboproSpiderMiddleware": 543,

# }

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

DOWNLOADER_MIDDLEWARES = {

"weiboPro.middlewares.WeiboproDownloaderMiddleware": 543,

}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# "scrapy.extensions.telnet.TelnetConsole": None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

"weiboPro.pipelines.WeiboproPipeline": 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = "httpcache"

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"
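The description of this file mentions the crawl rate, but in the listing above DOWNLOAD_DELAY and the AutoThrottle options are still commented out, and the spider pauses with sleep(3) instead. A possible set of values is sketched below. The setting names are standard Scrapy settings; the numbers are illustrative assumptions, not values tuned against Weibo.

```python
# Hypothetical additions to settings.py; the numbers are illustrative, not tuned.
DOWNLOAD_DELAY = 3                     # base delay in seconds between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True        # wait between 0.5x and 1.5x DOWNLOAD_DELAY
CONCURRENT_REQUESTS_PER_DOMAIN = 2     # keep parallelism low for a single host
AUTOTHROTTLE_ENABLED = True            # adapt the delay to the observed latency
AUTOTHROTTLE_START_DELAY = 5           # initial delay in seconds
AUTOTHROTTLE_MAX_DELAY = 60            # upper bound when the server is slow
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0  # average parallel requests per remote server
```

With a delay configured here, the downloader spaces out requests without blocking the callbacks.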

scrapy.cfg

The project configuration file; there is not much to say about it.

[settings]
default = weiboPro.settings

[deploy]
#url = http://localhost:6800/
project = weiboPro

The two remaining __init__.py files can be left empty; they are not used.


Original article: https://blog.csdn.net/qq_29003363/article/details/120394580