简介

自适应池化Adaptive Pooling是PyTorch含有的一种池化层,在PyTorch的中有六种形式:

自适应最大池化Adaptive Max Pooling:

torch.nn.AdaptiveMaxPool1d(output_size)

torch.nn.AdaptiveMaxPool2d(output_size)

torch.nn.AdaptiveMaxPool3d(output_size)

自适应平均池化Adaptive Average Pooling:

torch.nn.AdaptiveAvgPool1d(output_size)

torch.nn.AdaptiveAvgPool2d(output_size)

torch.nn.AdaptiveAvgPool3d(output_size)

具体可见官方文档。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

官方给出的例子:>>> # target output size of 5x7>>> m = nn.AdaptiveMaxPool2d((5,7))>>> input = torch.randn(1, 64, 8, 9)>>> output = m(input)>>> output.size()torch.Size([1, 64, 5, 7])>>> # target output size of 7x7 (square)>>> m = nn.AdaptiveMaxPool2d(7)>>> input = torch.randn(1, 64, 10, 9)>>> output = m(input)>>> output.size()torch.Size([1, 64, 7, 7])>>> # target output size of 10x7>>> m = nn.AdaptiveMaxPool2d((None, 7))>>> input = torch.randn(1, 64, 10, 9)>>> output = m(input)>>> output.size()torch.Size([1, 64, 10, 7]) |

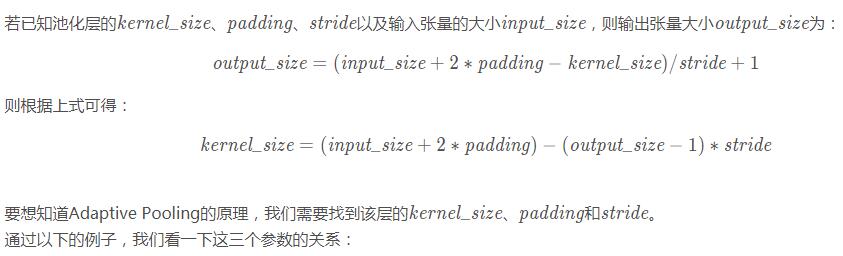

Adaptive Pooling特殊性在于,输出张量的大小都是给定的output_size output\_sizeoutput_size。例如输入张量大小为(1, 64, 8, 9),设定输出大小为(5,7),通过Adaptive Pooling层,可以得到大小为(1, 64, 5, 7)的张量。

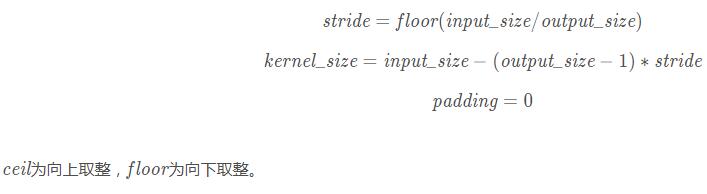

原理

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

|

>>> inputsize = 9>>> outputsize = 4>>> input = torch.randn(1, 1, inputsize)>>> inputtensor([[[ 1.5695, -0.4357, 1.5179, 0.9639, -0.4226, 0.5312, -0.5689, 0.4945, 0.1421]]])>>> m1 = nn.AdaptiveMaxPool1d(outputsize)>>> m2 = nn.MaxPool1d(kernel_size=math.ceil(inputsize / outputsize), stride=math.floor(inputsize / outputsize), padding=0)>>> output1 = m1(input)>>> output2 = m2(input)>>> output1tensor([[[1.5695, 1.5179, 0.5312, 0.4945]]]) torch.Size([1, 1, 4])>>> output2tensor([[[1.5695, 1.5179, 0.5312, 0.4945]]]) torch.Size([1, 1, 4]) |

通过实验发现:

下面是Adaptive Average Pooling的c++源码部分。

下面是Adaptive Average Pooling的c++源码部分。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

|

template <typename scalar_t> static void adaptive_avg_pool2d_out_frame( scalar_t *input_p, scalar_t *output_p, int64_t sizeD, int64_t isizeH, int64_t isizeW, int64_t osizeH, int64_t osizeW, int64_t istrideD, int64_t istrideH, int64_t istrideW) { int64_t d; #pragma omp parallel for private(d) for (d = 0; d < sizeD; d++) { /* loop over output */ int64_t oh, ow; for(oh = 0; oh < osizeH; oh++) { int istartH = start_index(oh, osizeH, isizeH); int iendH = end_index(oh, osizeH, isizeH); int kH = iendH - istartH; for(ow = 0; ow < osizeW; ow++) { int istartW = start_index(ow, osizeW, isizeW); int iendW = end_index(ow, osizeW, isizeW); int kW = iendW - istartW; /* local pointers */ scalar_t *ip = input_p + d*istrideD + istartH*istrideH + istartW*istrideW; scalar_t *op = output_p + d*osizeH*osizeW + oh*osizeW + ow; /* compute local average: */ scalar_t sum = 0; int ih, iw; for(ih = 0; ih < kH; ih++) { for(iw = 0; iw < kW; iw++) { scalar_t val = *(ip + ih*istrideH + iw*istrideW); sum += val; } } /* set output to local average */ *op = sum / kW / kH; } } }} |

以上这篇PyTorch的自适应池化Adaptive Pooling实例就是小编分享给大家的全部内容了,希望能给大家一个参考,也希望大家多多支持服务器之家。

原文链接:https://blog.csdn.net/u013382233/article/details/85948695